Cloud native is a new level of software abstraction, combined with a set of dedicated technologies, tools, and processes that transform how applications are built, deployed, and managed.

Characteristics of Cloud native applications

- Code is packaged as containers

- Applications are architected as collections of microservices

- Developers and IT operations teams work together in a tightly integrated, agile way

- Applications are deployed and managed on elastic cloud infrastructure

- Infrastructure resources are allocated automatically, based on policy

Benefits of Cloud native

Cloud native offers a variety of advantages that make it an ideal solution for modern organisations.

Faster application development and improved business agility

Organisations embarking on cloud native journeys are adopting containers and Kubernetes, along with a variety of open-source and commercial technologies from an expansive cloud native ecosystem. Containers and cloud native technologies enable organisations to accelerate application development, upgrade applications non-disruptively, scale them efficiently, and easily port them across different environments. These capabilities ultimately translate to better business agility and competitive advantage.

Solving critical enterprise issues with Cloud native containers

Containers solve several critical problems for enterprises. They provide robust, run-anywhere software packaging for developers, along with non-disruptive service upgrades, scalability, availability, and resource efficiency, all in a vendor-neutral package.

Efficient infrastructure management with Kubernetes

Kubernetes provides an infrastructure layer that IT can operate at scale in a programmatic, repeatable fashion. This is especially beneficial for managing cloud native environments and applications, as opposed to the more hands-on management typically found in traditional enterprise setups. Because Kubernetes supports both on-prem and public cloud deployments, it provides a common operating model across environments. This makes Kubernetes an ideal platform for companies that operate in both cloud native environments and traditional enterprise settings.

Cloud native architecture

Cloud native architecture uses containers and Kubernetes to build applications as sets of smaller, composable components. These components scale efficiently, can be upgraded without disruption, and migrate seamlessly to other environments. The resulting applications are cost-efficient, with better security and flexible automation.

The on-prem Kubernetes stack

Kubernetes is a cloud native container orchestration platform that is increasingly being used by organisations, both on-premises and in the cloud. We’ll explore the different layers of the Kubernetes stack on-prem, from the infrastructure that Kubernetes runs on, to the services running on top of Kubernetes that developers can leverage to accelerate application delivery.

Note that some components can appear in more than one layer of the stack. For instance, a Kubernetes distribution may include a container registry such as Harbor or Quay. Alternatively, a container registry may be offered as a hosted or managed service for use with any distribution.

Compute platform

Kubernetes is very flexible in where it can be deployed, and innovation keeps expanding the options for how and where it can be operated. Typically, a Kubernetes cluster requires a minimum of three nodes, with full network connectivity between them and attached storage. Today Kubernetes most commonly runs on virtual infrastructure such as VMware; bare metal and hyperconverged infrastructure (HCI) are two other common choices.

In addition to the compute platform itself, a variety of cloud native services can make provisioning and operating a Kubernetes cluster much easier. Infrastructure controlled through an API (e.g. Cluster API, Terraform) makes it easy to provision and connect new nodes. Custom hypervisors may offer stronger workload isolation to close security gaps in containers. A load balancer outside the Kubernetes cluster is useful for exposing workloads and for dynamically relocating and scaling them. Some workloads may benefit from specialised hardware such as GPUs or TPUs. And if many clusters are operated at multiple locations (e.g. in retail stores), a distributed management capability for the underlying infrastructure is useful.
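One common reason behind the three-node minimum described in this section is quorum. Assuming the control plane stores its state in etcd, the standard Kubernetes backing store, the cluster needs a majority of members (floor(n/2) + 1) to agree on every write, so it can tolerate n minus that majority in failures. The short Python sketch below only illustrates that arithmetic; the function names are hypothetical and not part of any Kubernetes or etcd API.

```python
def quorum_size(members: int) -> int:
    """Smallest majority of a consensus cluster with `members` voting members."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def failures_tolerated(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum_size(members)


if __name__ == "__main__":
    # Print the quorum and failure tolerance for clusters of one to five members.
    for n in range(1, 6):
        print(f"{n} member(s): quorum={quorum_size(n)}, "
              f"tolerates {failures_tolerated(n)} failure(s)")
```

Running it shows that two members tolerate no more failures than one, while three members tolerate one. That is why odd member counts, starting at three, are the usual recommendation for a highly available control plane.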

Kubernetes distributions

With the explosive growth of Kubernetes since its release in 2015, there are now over 100 Kubernetes distributions and hosted platforms from tech giants and startups alike. These companies take the core Kubernetes code and combine it with other projects, such as a networking stack, cluster management tools, logging, and monitoring, to create a distribution.

Container services

A useful Kubernetes environment requires additional infrastructure services beyond Kubernetes itself. These are either bundled with a distribution or added as separate services, and include basics such as a container registry, Ingress, a load balancer, a secret store, and a certificate manager, to name a few.

As more companies build and deploy applications on Kubernetes, they depend on many core services that typically also run in Kubernetes. Examples include databases (e.g. Redis, Postgres), pub/sub messaging (Kafka), indexing (Elasticsearch), CI/CD services (GitLab, Jenkins), and storage (Portworx, MayaData, MinIO, Red Hat).

Many companies have developed offerings that manage or support these cloud native applications on a customer's Kubernetes distribution of choice. Perhaps the most notable is Microsoft's Azure Arc Data Services, which provides Postgres and MS SQL as managed services in customer environments, but there are many others, including Confluent for Kafka, Crunchy Data for Postgres, Redis Enterprise for Redis, Percona, Elasticsearch, MinIO, and MayaData.

Containers

Containers are the atomic unit of cloud native software architecture. A container comprises a piece of code packaged up with all of its dependencies (binaries, libraries, etc.) and is run as an isolated process. This translates to a new level of abstraction. Much like the virtual machine (VM) abstracts compute resources from the underlying hardware, a container abstracts an application from the underlying operating system (OS).

Containers differ from VMs in several important ways. They have a significantly smaller resource footprint, start faster, and are far easier to port across cloud environments. Unlike VMs, they are ephemeral by nature.

Containers pave the way for microservices software architecture. While legacy applications are monolithic, microservices-based applications are built as collections of smaller, composable pieces (services) that can scale independently and be upgraded without disruption. A service comprises one or more containers that perform a common function.
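Because each service scales independently, one service can be scaled out under load while another is scaled in or left alone. The Python sketch below applies the replica formula documented for the Kubernetes Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), to each service separately. The service names, utilisation figures, and min/max bounds are made-up assumptions, and the real autoscaler also applies a tolerance and stabilisation windows that this sketch leaves out.

```python
import math
from dataclasses import dataclass


@dataclass
class Service:
    name: str
    replicas: int
    cpu_utilisation: float  # current average CPU utilisation, in percent


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """HPA-style count: ceil(current * current_metric / target_metric), clamped to bounds."""
    desired = math.ceil(current * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))


def rescale(services: list[Service], target_cpu: float) -> dict[str, int]:
    # Each service is evaluated on its own metric, so scaling one never affects another.
    return {s.name: desired_replicas(s.replicas, s.cpu_utilisation, target_cpu)
            for s in services}


if __name__ == "__main__":
    # Hypothetical services and utilisation figures, for illustration only.
    services = [
        Service("checkout", replicas=2, cpu_utilisation=90.0),
        Service("catalogue", replicas=3, cpu_utilisation=40.0),
    ]
    print(rescale(services, target_cpu=60.0))  # {'checkout': 3, 'catalogue': 2}
```

With a 60% CPU target, the busy checkout service grows from two to three replicas while the quiet catalogue service shrinks from three to two, without either decision touching the other.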

Containerised, microservices-based applications enable streamlined, accelerated software development, which requires tighter integration and coordination between developers and IT operations, a practice referred to as DevOps.

Kubernetes

Because containerised applications lend themselves to distributed environments and use compute, storage, and network resources differently than do legacy applications, they require a layer of infrastructure dedicated to orchestrating containerised workloads.

Kubernetes has emerged as the dominant container orchestrator and is often described as the operating system of the cloud. It is a portable, extensible, open-source platform for managing containerised workloads and services that facilitates both declarative configuration and automation.
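Declarative configuration means describing the desired state and letting the platform work out the steps to reach it. The Python sketch below imitates that idea with a hypothetical `reconcile` function that compares desired and observed replica counts per workload and returns the actions needed to close the gap. It is a toy model under that assumption, not the Kubernetes controller API; real controllers watch the API server and act on live objects.

```python
from typing import Dict, List


def reconcile(desired: Dict[str, int], observed: Dict[str, int]) -> List[str]:
    """Return actions that move `observed` toward `desired` (hypothetical model)."""
    actions: List[str] = []
    for name, want in sorted(desired.items()):
        have = observed.get(name, 0)
        if have < want:
            actions.append(f"start {want - have} replica(s) of {name}")
        elif have > want:
            actions.append(f"stop {have - want} replica(s) of {name}")
    # Workloads that are running but no longer declared are removed entirely.
    for name in sorted(set(observed) - set(desired)):
        actions.append(f"stop {observed[name]} replica(s) of {name}")
    return actions


if __name__ == "__main__":
    desired = {"web": 3, "worker": 2}
    observed = {"web": 1, "worker": 2, "legacy-cron": 1}
    for action in reconcile(desired, observed):
        print(action)
```

Because the function only compares states, running it again once the actions have been applied returns an empty list. That idempotence is what makes declarative management repeatable: the same configuration can be applied any number of times with the same result.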

Specifically, Kubernetes:

- Assigns containers to machines (scheduling)

- Boots the specified containers through the container runtime

- Deals with upgrades, rollbacks, and the constantly changing nature of the system

- Responds to failures (container crashes, etc.)

- Creates cluster resources such as service discovery, inter-node networking, and cluster ingress/egress
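To make the scheduling step more concrete, the Python sketch below follows the general filter-then-score shape of the Kubernetes scheduler: it discards nodes that lack the requested CPU and memory, then prefers the node that would have the largest fraction of its capacity left free, similar in spirit to a "least allocated" scoring strategy. The node names, capacities, and scoring rule are simplified assumptions; the real scheduler runs many more filter and score plugins.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity: int      # millicores
    cpu_allocated: int     # millicores already requested by running containers
    memory_capacity: int   # MiB
    memory_allocated: int  # MiB already requested by running containers


@dataclass
class Request:
    cpu: int     # millicores
    memory: int  # MiB


def fits(node: Node, req: Request) -> bool:
    """Filter step: does the node have enough unallocated CPU and memory?"""
    return (node.cpu_allocated + req.cpu <= node.cpu_capacity
            and node.memory_allocated + req.memory <= node.memory_capacity)


def score(node: Node, req: Request) -> float:
    """Score step: average fraction of CPU and memory left free after placement."""
    cpu_free = (node.cpu_capacity - node.cpu_allocated - req.cpu) / node.cpu_capacity
    mem_free = (node.memory_capacity - node.memory_allocated - req.memory) / node.memory_capacity
    return (cpu_free + mem_free) / 2


def schedule(req: Request, nodes: List[Node]) -> Optional[str]:
    feasible = [n for n in nodes if fits(n, req)]
    if not feasible:
        return None  # no node fits, so the container stays pending
    return max(feasible, key=lambda n: score(n, req)).name


if __name__ == "__main__":
    # Hypothetical cluster state, for illustration only.
    nodes = [
        Node("node-a", 4000, 3500, 8192, 2048),
        Node("node-b", 4000, 1000, 8192, 6144),
        Node("node-c", 8000, 2000, 16384, 4096),
    ]
    print(schedule(Request(cpu=1000, memory=2048), nodes))  # node-c
```

Here node-a is filtered out for lack of CPU, and node-c wins over node-b because it keeps more headroom after placement. Spreading load this way is one simple way to distribute workloads across available resources.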

Kubernetes is designed for scalability, availability, security, and portability. It optimises infrastructure cost by distributing workloads across available resources. Each component of a Kubernetes cluster can also be configured for high availability.

Kubernetes functionality evolves rapidly as a result of continuous contributions from its active global community, and many Kubernetes distributions are now available to users. Users are best served by CNCF-certified distributions, because conformance enables interoperability. Beyond conformance, production-grade cloud native environments also need intelligent automation of Kubernetes lifecycle management, together with easy integration of storage, networking, security, and monitoring capabilities.

Cloud native challenges

Cloud native technologies are the new currency of business agility and innovation, but configuring, deploying, and managing them is challenging for any type of enterprise.

Speed of evolution

Kubernetes and its ecosystem of cloud native technologies are deep and evolve quickly. Staying up to date with these rapid advances requires continuous learning and adaptation, which can be overwhelming and time-consuming for an organisation.

Legacy infrastructure incompatibility

Legacy infrastructure isn’t architected for the way Kubernetes and containers use IT resources. This is a significant impediment to software developers, who often require resources on demand, along with easy-to-use services for their cloud native applications.

Effectively managing all deployments

Most organisations end up using a mix of on-prem and public cloud Kubernetes environments, but benefiting from multicloud flexibility depends on being able to manage and monitor all of those deployments effectively.

Cloud native best practices

Building an enterprise Kubernetes stack in the datacentre is a major undertaking for IT operations teams. It is essential to run Kubernetes and containerised applications on infrastructure that is resilient and can scale responsively in support of a dynamic, distributed system.

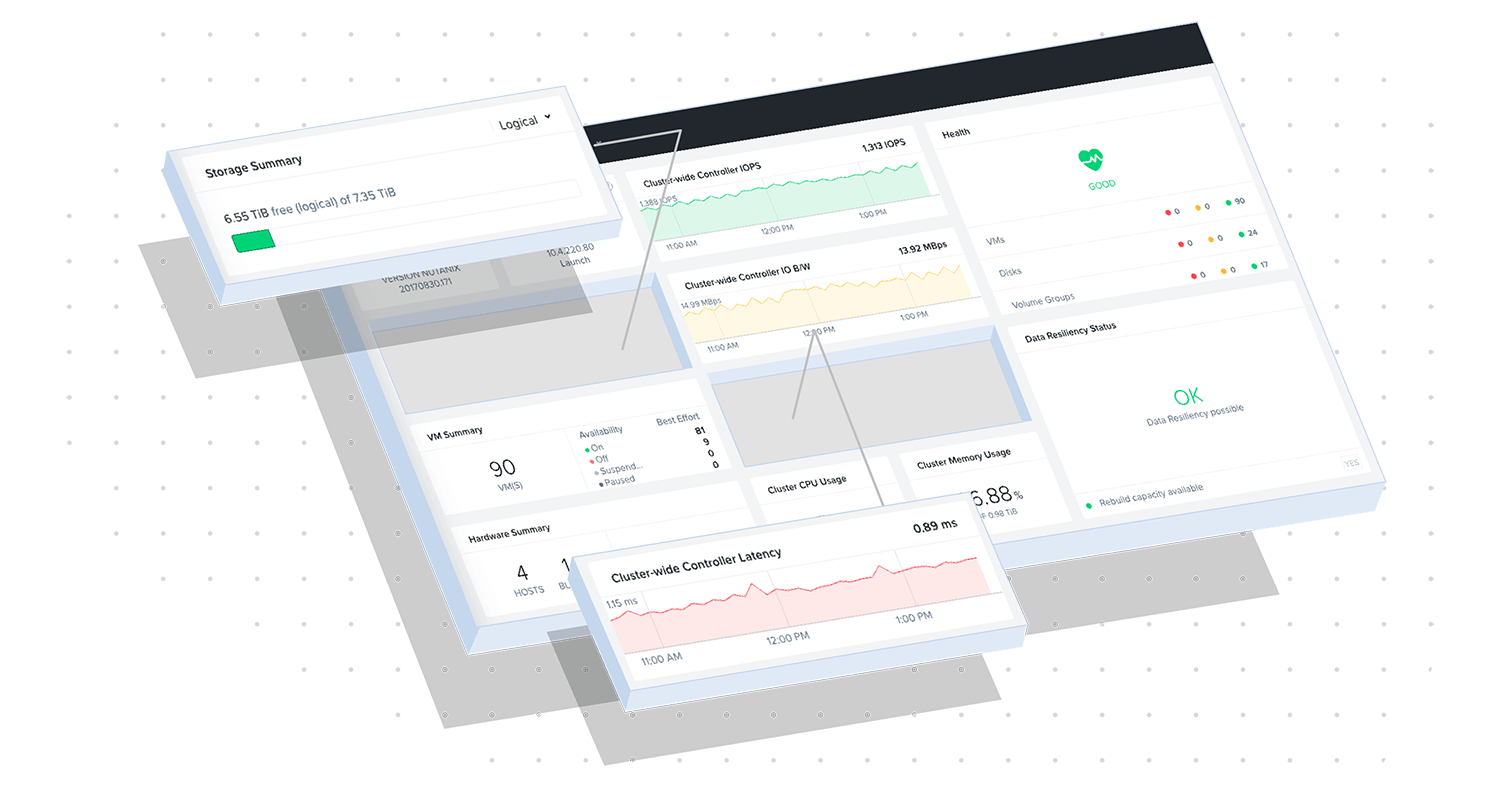

Nutanix hyperconverged infrastructure (HCI) is the ideal infrastructure foundation for cloud native workloads running on Kubernetes at scale. Nutanix offers better resilience for both Kubernetes platform components and application data, and superior scalability to bare metal infrastructure. Nutanix also simplifies infrastructure lifecycle management and persistent storage for stateful containers, eliminating some of the most difficult challenges organisations face in deploying and managing cloud native infrastructure.
