A distributed file system, or DFS, is a data storage and management scheme that allows users or applications to access data files such as PDFs, word documents, images, video files, and audio files from shared storage across any one of multiple networked servers. With data shared and stored across a cluster of servers, DFS enables many users to share storage resources and data files across many machines.

There are two primary reasons an enterprise would use a Distributed File System (DFS):

- To permanently store data on secondary storage media

- To easily, efficiently and securely share information between users and applications

As a subsystem of the computer’s operating system, DFS manages, organises, stores, protects, retrieves, and shares data files. Applications or users can store or access data files in the system just as they would a local file. From their computers or smartphones, users can see all the DFS’s shared folders as a single path that branches out in a treelike structure to files stored on multiple servers.

A Distributed File System (DFS) has two critical components:

- Location transparency – this means users will see a single namespace for all the data files, regardless of which computer they’re using to access or store the files. Users won’t be able to tell where the file was initially stored and can move files around within the folders as needed without having to change the path name.

- Redundancy – through a file replication feature, DFS spreads copies of a file across the nodes of the cluster, which means data stays highly available, even in the event of a server failure.
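To make the two components concrete, here is a minimal sketch of a namespace table, assuming a simple model in which each logical folder maps to a list of servers that hold a copy of it. The `Namespace` class, its methods, and the server names such as `srv-a` are hypothetical and are not any product's API. Users only ever pass the logical path; which servers back that path (redundancy) can change without the path changing (location transparency).

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Namespace:
    """Hypothetical single namespace: logical folders mapped to replica servers."""

    # Logical folder path -> servers that each hold a copy of that folder.
    folder_targets: dict[str, list[str]] = field(default_factory=dict)

    def add_folder(self, logical_path: str, servers: list[str]) -> None:
        # Redundancy: a folder can be backed by several servers at once.
        # Calling this again for an existing folder re-points it to new servers
        # without changing the path users see (location transparency).
        self.folder_targets[logical_path.rstrip("/")] = list(servers)

    def resolve(self, logical_path: str) -> list[str]:
        """Map a logical file path to the servers that can serve it.

        Uses the longest folder prefix that matches, so nested folders can
        live on different servers than their parent.
        """
        best = ""
        for folder in self.folder_targets:
            matches = logical_path == folder or logical_path.startswith(folder + "/")
            if matches and len(folder) > len(best):
                best = folder
        if not best:
            raise FileNotFoundError(logical_path)
        return self.folder_targets[best]


if __name__ == "__main__":
    ns = Namespace()
    ns.add_folder("/corp/finance", ["srv-a", "srv-b"])
    print(ns.resolve("/corp/finance/q3.pdf"))  # ['srv-a', 'srv-b']
    ns.add_folder("/corp/finance", ["srv-d", "srv-e"])  # data moved; path unchanged
    print(ns.resolve("/corp/finance/q3.pdf"))  # ['srv-d', 'srv-e']
```

The longest-prefix rule is one simple design choice that lets a subfolder be placed on different servers than its parent while still appearing in the same tree.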

How Distributed File Systems work

With a Distributed File System (DFS), workstations and servers are networked together to create one parallel file system with a cluster of storage nodes. The system is grouped under a single namespace and storage pool and can enable fast data access through multiple hosts, or servers, simultaneously.

The data itself can reside on a variety of storage devices or systems, from hard disk drives (HDDs) to solid state drives (SSDs) to the public cloud. Regardless of where the data is stored, DFS can be set up either as a standalone (or independent) namespace, with just one host server, or as a domain-based namespace with multiple host servers.

When a user clicks a file name to access that data, the DFS checks several servers, depending on where the user is located, then serves up the first available copy of the file in that server group. This prevents any of the servers from getting too bogged down when lots of users are accessing files, and also keeps data available despite server malfunction or failure.
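The selection rule described above, trying servers close to the user first and serving the first copy that is available, can be sketched as follows. This is a simplified illustration, not how any particular DFS implements it: the `Replica` type, the `site` labels, and the boolean `available` flag are hypothetical stand-ins for real network-cost metrics and server health checks.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Replica:
    server: str      # hypothetical server name
    site: str        # hypothetical site label, e.g. "london"
    available: bool  # stand-in for a real health check


def order_by_proximity(replicas: list[Replica], client_site: str) -> list[Replica]:
    """Put replicas at the client's own site first, remote ones after."""
    # False sorts before True, so same-site replicas come first.
    # sorted() is stable, so replicas at the same distance keep their order.
    return sorted(replicas, key=lambda r: r.site != client_site)


def pick_replica(replicas: list[Replica], client_site: str) -> Replica:
    """Return the first available copy, trying nearby servers before remote ones."""
    for replica in order_by_proximity(replicas, client_site):
        if replica.available:
            return replica
    raise ConnectionError("no replica of the file is currently reachable")


if __name__ == "__main__":
    copies = [
        Replica("srv-ny-1", "new-york", True),
        Replica("srv-ldn-1", "london", False),  # failed server is skipped
        Replica("srv-ldn-2", "london", True),
    ]
    print(pick_replica(copies, "london").server)  # srv-ldn-2
```

Because a failed server is simply skipped, a single outage does not make the file unreachable as long as another copy is up.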

Through the DFS file replication feature, any changes made to a file are copied to all instances of that file across the server nodes.
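A toy model of this replication step, assuming each server's copy is an in-memory byte buffer, might look like the sketch below. The `ReplicatedFile` class and server names are hypothetical. The sketch applies every write to every copy in turn; it deliberately leaves out the failure handling, write ordering, and conflict resolution a real replication engine needs.

```python
from __future__ import annotations


class ReplicatedFile:
    """Hypothetical file whose writes are copied to every replica in turn.

    No failure handling or ordering guarantees: this only illustrates that
    one change is applied to all instances of the file.
    """

    def __init__(self, servers: list[str]) -> None:
        # One byte buffer per server stands in for that server's copy.
        self.copies: dict[str, bytearray] = {s: bytearray() for s in servers}

    def write(self, offset: int, data: bytes) -> None:
        for copy in self.copies.values():
            # Pad with zero bytes if the write starts past the current end.
            if len(copy) < offset:
                copy.extend(b"\x00" * (offset - len(copy)))
            # Overwrite (and extend, if needed) the byte range in place.
            copy[offset:offset + len(data)] = data

    def read(self, server: str) -> bytes:
        return bytes(self.copies[server])


if __name__ == "__main__":
    f = ReplicatedFile(["srv-a", "srv-b", "srv-c"])
    f.write(0, b"hello world")
    f.write(6, b"there")
    print(f.read("srv-c"))  # b'hello there'
```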

Features of DFS

There are many DFS solutions designed to help enterprises manage, organise, and access their data files, and most of them include the following features:

- Access transparency – users access files as if they are stored locally on their own workstations

- Location transparency – host machines don’t need to know where the file data actually is because the DFS manages that

- File locking – the system locks down files in use across locations to prevent two users from different locations making changes to the same file at the same time

- Encryption for data in transit – DFS protects data by encrypting it as it moves through the system

- Support for multiple protocols – hosts can access files using a range of protocols and interfaces, such as Server Message Block (SMB), Network File System (NFS), and the Portable Operating System Interface (POSIX), to name just a few
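The file-locking feature in the list above can be illustrated with a minimal lock table, assuming a single coordinator that every location consults before writing. The `LockManager` class and the client names are hypothetical. Production systems typically also expire locks (for example through time-limited leases) so that a crashed client cannot hold a file forever; that is omitted here.

```python
from __future__ import annotations

import threading


class LockManager:
    """Hypothetical central lock table: at most one writer per logical path."""

    def __init__(self) -> None:
        self._owners: dict[str, str] = {}  # path -> client holding the lock
        self._mutex = threading.Lock()     # protects _owners from concurrent access

    def acquire(self, path: str, client: str) -> bool:
        """Grant the lock if the path is free or already held by this client."""
        with self._mutex:
            owner = self._owners.get(path)
            if owner is None or owner == client:
                self._owners[path] = client
                return True
            return False  # another user, possibly at another site, holds it

    def release(self, path: str, client: str) -> None:
        """Release the lock, but only if this client actually holds it."""
        with self._mutex:
            if self._owners.get(path) == client:
                del self._owners[path]


if __name__ == "__main__":
    locks = LockManager()
    print(locks.acquire("/corp/plan.docx", "alice@london"))  # True
    print(locks.acquire("/corp/plan.docx", "bob@tokyo"))     # False
```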

Benefits of Distributed File System

The number one advantage of a distributed file system, or DFS, is that it allows people to access the same data from many locations. It also makes information sharing across geographies simple and extremely efficient. DFS can largely eliminate the need to copy files from one site to another or move folders, all of which takes time and effort better spent elsewhere.

Other benefits of DFS include:

- Data resiliency – because files reside in more than one location, a server failure won’t signal disaster

- Network efficiency – heavy workloads won’t slow down the system because DFS can grab data from the next available node

- Access to latest information – changes made to shared folders or files are visible and available instantly to everyone who uses the DFS

- Simple scalability – growing the system simply means adding more nodes

- High reliability – data loss becomes much less of a concern with files replicated across hosts


Distributed File System vs object storage

Like a Distributed File System (DFS), object storage stores information across many nodes of a cluster for quick, resilient, and efficient access to data. Both eliminate the potential “single point of failure.” But they are not the same thing.

DFS and object storage are different in several ways, including:

- Structure – instead of storing data files in a hierarchical structure like DFS does, object storage is made up of flat buckets of objects.

- API – as stated previously, DFS supports traditional file system protocols, so it works with almost any application. Object storage is accessed through a REST (representational state transfer) API, which was designed for use on the web and relies on HTTP requests to access and use data. The two most widely used specifications for accessing object storage are the Amazon S3 API, developed by Amazon, and the OpenStack Swift API, developed within the OpenStack project.

- Modification method – DFS allows users to make changes, or “writes,” anywhere in any data file. To make a change in object storage, users must completely replace an object.
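The difference in modification method can be shown with two plain dictionaries standing in for a file store and an object store. This is a sketch under that assumption only: the function names are hypothetical and no real S3 or Swift calls are made. Each function returns how many bytes had to be written, which highlights why changing one character of a large object is costlier than changing it in a file.

```python
from __future__ import annotations


def file_style_update(store: dict[str, bytearray], path: str, offset: int, data: bytes) -> int:
    """File semantics: overwrite a byte range in place; the rest is untouched."""
    store[path][offset:offset + len(data)] = data
    return len(data)  # only the changed bytes are written


def object_style_update(store: dict[str, bytes], key: str, offset: int, data: bytes) -> int:
    """Object semantics: fetch the whole object, patch it locally, then store
    a complete replacement under the same key."""
    old = store[key]                                  # like a GET of the full object
    new = old[:offset] + data + old[offset + len(data):]
    store[key] = new                                  # like a PUT replacing the object
    return len(new)  # the entire object is rewritten


if __name__ == "__main__":
    files = {"/reports/r.txt": bytearray(b"version 1 draft")}
    objects = {"reports/r.txt": b"version 1 draft"}
    print(file_style_update(files, "/reports/r.txt", 8, b"2"))    # 1
    print(object_style_update(objects, "reports/r.txt", 8, b"2"))  # 15
```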

Distributed File System examples

When it comes to finding a Distributed File System (DFS) solution, there are many options. They range from free, open-source software such as Ceph and the Hadoop Distributed File System (HDFS), to cloud services such as Amazon S3 and Microsoft Azure, to proprietary solutions such as Nutanix Files and Nutanix Objects.

The characteristics of DFS make it well suited to a range of use cases, particularly workloads that require extensive random reads and writes, and data-intensive jobs in general. These include complex computer simulations, high-performance computing, log processing, and machine learning.

Explore our top resources

Nutanix again named a Visionary in the 2022 Gartner® Magic Quadrant™ for Distributed File Systems and Object Storage

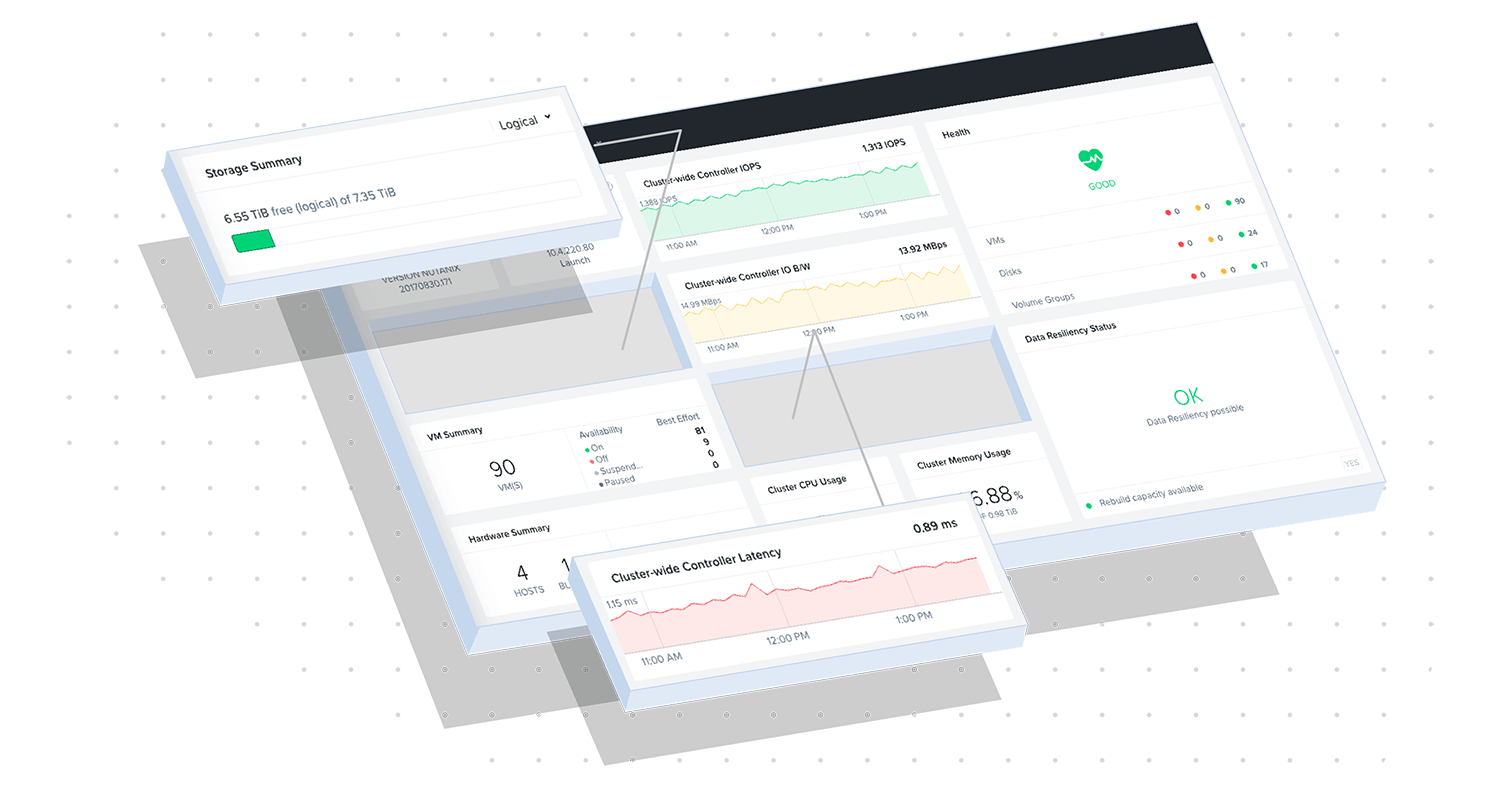

Files Storage

Discover how Files Storage creates a single platform for all your application needs.

Transform your Storage

Simplify the complex world of file storage with HCI and enjoy cloud-like deployment and provisioning.

Related products and solutions

Files Storage

Simple, scalable, and smart cloud-based file management.

Objects Storage

Simple, Secure, Scale-Out Cloud Object Storage.

Unified Storage

Manage and share unstructured data and replace storage silos that limit visibility and block access.