This weighty blog post describes the use-cases and GPU options for Frame in public clouds and on-premises. It also explains how to right-size GPU-powered workload VMs, which tools to use, and the common mistakes to avoid when using GPUs with Frame.

PlayStation 5, RPi, Chromebook, MacBook, and Apple iPhone - what’s the common denominator?!

Nowadays, every modern end-user device has a Graphics Processing Unit (GPU), a hardware-based decoder, or both. You will find them under the technology covers of your Chromebook, RPi, Windows Ultrabook, PlayStation 5, Tesla Model S, Apple Watch, Oculus VR headset, and latest Android smartphone. But why do GPUs and hardware-based encoders/decoders matter in the world of Desktop-as-a-Service (DaaS)?

Although GPUs and encoders/decoders serve different purposes, in the end it is all about delivering the best graphical user experience. That means supporting application APIs such as DirectX and OpenGL, and offloading encoding/decoding from the CPU to optimize resource consumption.

The goal here is to deliver the best user experience to those running operating systems such as Windows 10, and their Windows applications, both on physical PCs and on “Cloud Workstations” delivered via DaaS solutions. Examples include Nutanix Frame, Amazon WorkSpaces, Citrix Virtual Apps and Desktops Service, Microsoft Windows Virtual Desktop (WVD), and VMware Horizon Cloud.

GPUs are HOT - Games, VR, AI, Designers, and DaaS

Every technology geek wants the latest and greatest AMD or NVIDIA GPU in their gaming PC. Virtual Reality, GPUs with real-time ray tracing, and unified communications with AI-based noise canceling for business and entertainment are real, today! Cryptocurrency miners also rely heavily on GPUs. Machine Learning and Deep Learning are sky-rocketing thanks to advances in data, cloud, and GPUs.

In the context of professional graphics, designers know the value of GPUs when running workstation applications from Adobe, Autodesk, Siemens, PTC, Dassault Systèmes, and more. The current Windows 10 operating system, browsers, video conferencing tools such as Teams and Zoom, and Microsoft Office 365 also all benefit when the physical or virtual machine is equipped with a GPU.

GPUs - yay!?

Why, then, aren’t all the millions of virtual desktop and application users worldwide using GPUs? Are GPUs only for special “cloud workstation” use-cases? (Hint: No.) Can remoting protocols use the GPU to deliver virtual desktops and applications with the best user experience? (Hint: Yes.)

Modern DaaS remoting protocols, such as the Nutanix Frame Remoting Protocol (FRP), can use hardware-based (GPU) encoding. This delivers an awesome user experience to “run any application in the browser” while offloading the CPU. That is one great benefit of leveraging GPUs or vGPUs. The other is support for application APIs such as OpenGL, DirectX, and CUDA.

Customers often ask the question, “do I really need GPUs for all my applications and my use-cases, including single task and knowledge workers?”

Some say, “GPU4All,” but in my opinion there is always some nuance. The fact is that DaaS with Windows 10 on the latest AMD and Intel CPUs can work well without GPUs. There are, in fact, large (90K+) customer deployments of virtual desktops and applications running on CPU-only configurations.

Of course, right-sizing the infrastructure and virtual machines using common practices is essential here. More insights about sizing can be found in this blog post.

Key questions to be asked are:

- Does a “CPU only” configuration provide the best user experience? What are the applications used and do they benefit from the GPU?

- What is the right balance between user-experience and costs? What are the current and proposed costs?

- Is the CPU-only configuration future-proof? The Windows OS increasingly expects a “GPU inside”, and more and more applications benefit from GPU functionality. These include browsers, Microsoft Teams, Zoom, and Microsoft Office.

CPU, GPU, vGPU, Passthrough GPU - What to Choose?

The Frame Desktop-as-a-Service solution has several strong processing options available for your “Cloud PC” and “Cloud Workstation” use-cases. These include CPU-only, NVIDIA Virtual GPU (vGPU aka GRID), AMD GPU (MxGPU) with Virtual Functions (VF), and passthrough GPU options from AMD and NVIDIA.

Selecting the optimal GPU option depends on the expected user experience, the use case, the application requirements, and the business case. (Note: to learn why GPUs matter for virtual desktops and applications, check out this great solution brief).

Dedicated and Virtual GPUs

Let’s dive a bit deeper into the GPU options Nutanix Frame can use in both public clouds and on-premises. From a GPU perspective, there are two technology directions:

- Dedicated GPUs

- Virtual GPUs (vGPUs)

Dedicated GPUs

Dedicated GPUs are also known as GPU “pass-through” or “DDA” (Discrete Device Assignment). This means the Virtual Machine (VM) has a dedicated “full GPU” at its disposal.

If a GPU board has multiple GPUs, each VM can access its own dedicated GPU. For instance, the NVIDIA Tesla M10 has four GPUs per board. That means four VMs can be powered on, each with its own GPU in a 1:1 mapping. When a board has only one GPU, only one VM can be powered on and use that GPU. Commonly used GPUs such as the NVIDIA Tesla T4, Tesla P40, and Quadro RTX 8000 are powerful, but they have only one GPU per board.
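To make the 1:1 mapping concrete, here is a minimal Python sketch. It counts how many VMs on one host can each receive a full, dedicated GPU. The board table contains only the GPUs-per-board figures mentioned above. The function name `max_passthrough_vms` is hypothetical, and you should check any other board model against the vendor’s specifications.

```python
from __future__ import annotations

# GPUs per board for the models discussed above (passthrough/DDA: 1 GPU -> 1 VM).
GPUS_PER_BOARD = {
    "Tesla M10": 4,
    "Tesla T4": 1,
    "Tesla P40": 1,
    "Quadro RTX 8000": 1,
}


def max_passthrough_vms(boards: dict[str, int]) -> int:
    """Return how many VMs can each get one dedicated GPU.

    `boards` maps a board model to the number of such boards in the host.
    """
    total = 0
    for model, count in boards.items():
        if model not in GPUS_PER_BOARD:
            raise KeyError(f"unknown board model: {model}")
        total += GPUS_PER_BOARD[model] * count
    return total


if __name__ == "__main__":
    # Two M10 boards (4 GPUs each) plus one T4 board: 2 * 4 + 1 = 9 GPU-powered VMs.
    print(max_passthrough_vms({"Tesla M10": 2, "Tesla T4": 1}))
```

Every additional GPU-powered VM beyond that count needs another physical GPU. This is why dedicated GPUs scale less flexibly than shared ones.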

Dedicated GPU Options in Public Cloud

The typical GPU-based instances on Microsoft Azure, AWS, and Google Cloud today use dedicated GPUs. Common instance families are Azure NV (NVIDIA-based), AWS G4 (AMD- and NVIDIA-based), and the GCP NVIDIA-based instances. These instances all use dedicated “passthrough/DDA” GPUs, and the NVIDIA-based instances include the NVIDIA vGPU software licenses. Some of these instance families offer machines with multiple dedicated GPUs. These support high-end workstation applications such as Autodesk VRED with real-time ray tracing.

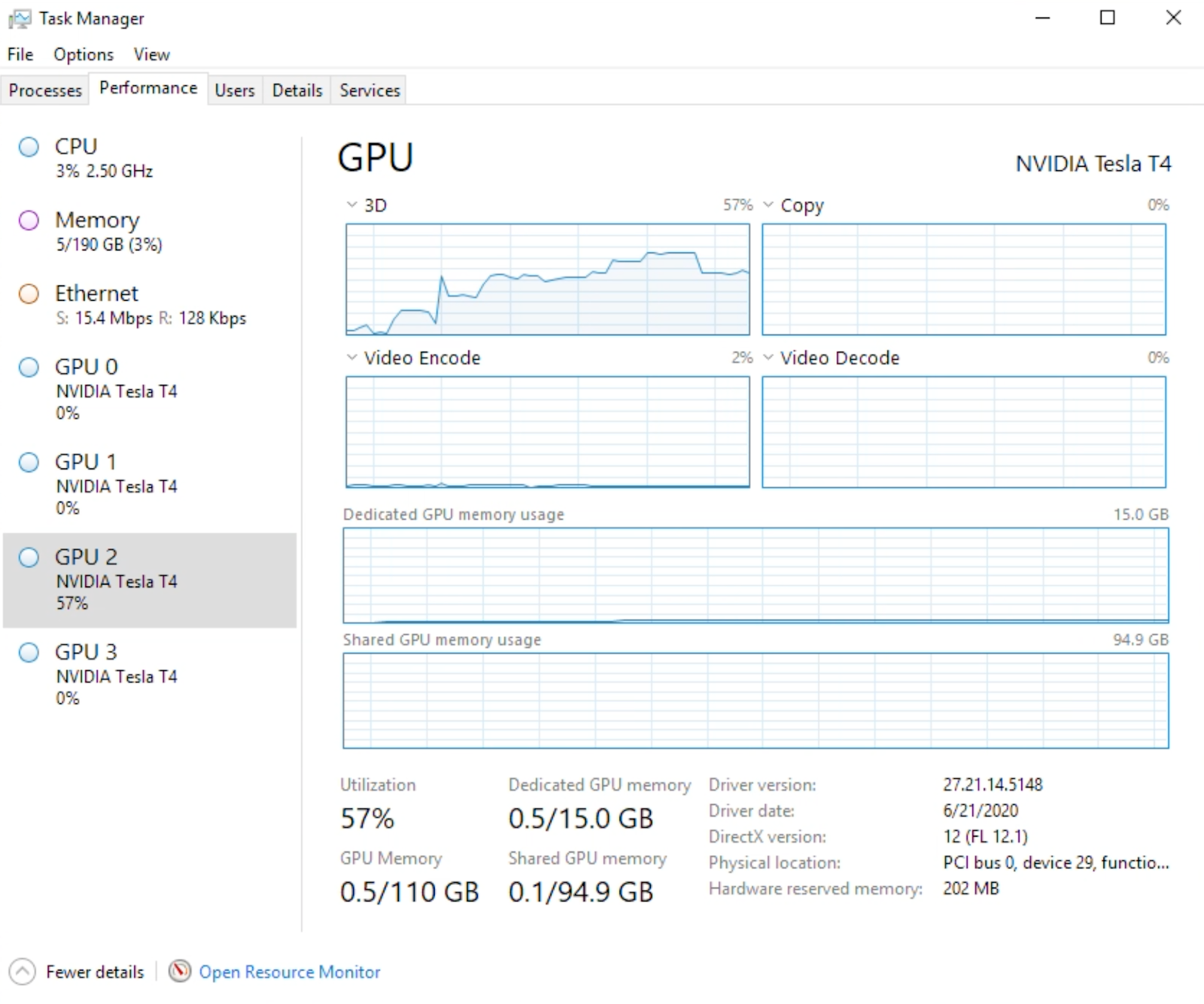

(Figure 1: Frame-powered instance with four dedicated NVIDIA Tesla T4 GPUs)

These dedicated GPU instances provide consistent, high performance because the GPUs aren’t shared: the GPU frame buffer (memory), cores, and encoders/decoders are all dedicated to the instance. The downside is that, from an infrastructure perspective, you cannot share the GPU, and therefore its running costs, with other users.

Overall, the GPU options and GPU boards suitable for DaaS in the public cloud lag behind on-premises options. For instance, NVIDIA vGPUs are not available on Azure, AWS, or Google Cloud, and the available GPU boards are a few years behind. That said, many customers use these instances for DaaS. They benefit from pay-as-you-go pricing, multiple cloud regions, the flexibility to spin up hundreds or thousands of VMs within minutes or hours, and more.

It is worth noting that Microsoft Azure, AWS, and Google Cloud do provide access to the latest generation of GPUs. However, these instances are targeted at “Deep Learning” and “Computing”, not “Virtualization/DaaS”, because they don’t include the NVIDIA licenses needed for flexible Desktop-as-a-Service.

Dedicated GPU Options in an On-prem Deployment

In an on-premises DaaS scenario, dedicated GPUs are uncommon because of their limited flexibility and scalability. It is more common to use vGPUs with workstation-class GPU profile characteristics.

Virtual GPUs in an On-prem Deployment

The term “Virtual GPUs” can mean different things to different people. In this context, it means that the virtual machine will receive “a slice” of the GPU which is handled by a lower-level software or hardware component.

The “slicing”, “partitioning”, or virtualization of the GPU can be done in software, in hardware, or in a combination of both (a hybrid approach). For instance, NVIDIA vGPU Manager software in combination with SR-IOV, running on Nutanix AHV, is a hybrid setup. In this scenario, different predefined vGPU profiles are available at the hypervisor (e.g., Nutanix AHV) level, and the VMs can use them.

AMD’s slicing technology leverages SR-IOV to create Virtual Functions that are assigned to VMs. Some questions are beyond the scope of this blog: how much GPU frame buffer and how many GPU cores to make available to each VM, and how strict the GPU scheduler configuration should be. The key is to understand the performance and capabilities of vGPU profiles and Virtual Functions, as well as the NVIDIA vGPU licensing options.
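Frame buffer capacity largely determines how many VMs a board can serve with a given vGPU profile. A rough density estimate therefore helps when comparing profiles.

The following Python sketch assumes a time-sliced NVIDIA vGPU setup where every VM on a physical GPU uses the same profile size. It simply divides each GPU’s frame buffer by the profile’s frame buffer. The frame buffer sizes are the published per-GPU values for these boards. The optional `max_per_gpu` cap is a hypothetical parameter that stands in for the per-profile limits in NVIDIA’s vGPU documentation, which always take precedence.

```python
from typing import Optional

# board model -> (GPUs per board, frame buffer per GPU in MiB)
BOARDS = {
    "Tesla M10": (4, 8192),
    "Tesla T4": (1, 16384),
    "Tesla P40": (1, 24576),
}


def vms_per_board(model: str, profile_mib: int, max_per_gpu: Optional[int] = None) -> int:
    """Estimate VMs per board for one vGPU profile size (frame buffer only)."""
    gpus, fb_mib = BOARDS[model]
    if not 0 < profile_mib <= fb_mib:
        raise ValueError("profile must be > 0 and fit in one GPU's frame buffer")
    per_gpu = fb_mib // profile_mib  # whole profiles that fit in one GPU
    if max_per_gpu is not None:
        per_gpu = min(per_gpu, max_per_gpu)  # respect a vendor per-profile limit
    return gpus * per_gpu


if __name__ == "__main__":
    for model, profile_mib in [("Tesla M10", 1024), ("Tesla T4", 2048), ("Tesla P40", 4096)]:
        print(model, profile_mib, "MiB ->", vms_per_board(model, profile_mib), "VMs")
```

Doubling the profile size halves the density, so the GPU cost per user doubles. That is the balance between user experience and costs in practice.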

If you want to read more about NVIDIA vGPU software licensing and the capabilities provided by the software, the NVIDIA vGPU licensing and packaging guide is a must-read.

Virtual GPU Options in Public Cloud

Among the major public clouds, there is currently only one virtual GPU option. It is available on Microsoft Azure and is powered by AMD. The Azure NVv4 instance family uses AMD Radeon Instinct MI25 GPUs and AMD EPYC Rome CPUs. More detailed information can be found in the blog post “Introducing Azure NVv4 and Nutanix Frame”. Be sure to check out the video in that post; you will see Frame in action with an NVv4 machine in a WAN scenario with 100 ms latency. Unfortunately, NVIDIA vGPU isn’t available in Microsoft Azure, AWS, or Google Cloud. However, Alibaba Cloud (AliCloud) does support NVIDIA vGPU.
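NVv4 sizes expose a fraction of one MI25 GPU, so choosing a size mostly comes down to how much GPU memory a user needs. Here is a minimal Python sketch. It assumes the four NVv4 sizes and GPU memory figures that Microsoft published for the series: 1/8, 1/4, and 1/2 of a GPU, and a full GPU, with 2, 4, 8, and 16 GiB respectively. Verify these against current Azure documentation before relying on them. The function name `smallest_nvv4_size` is hypothetical.

```python
# Azure NVv4 sizes, smallest first: (size name, share of one MI25 GPU, GPU memory in GiB).
# Figures as published by Microsoft for the NVv4 series; check current Azure docs.
NVV4_SIZES = [
    ("Standard_NV4as_v4", "1/8", 2),
    ("Standard_NV8as_v4", "1/4", 4),
    ("Standard_NV16as_v4", "1/2", 8),
    ("Standard_NV32as_v4", "1", 16),
]


def smallest_nvv4_size(required_gpu_mem_gib: float) -> str:
    """Return the smallest NVv4 size whose GPU memory covers the requirement."""
    for name, _share, mem_gib in NVV4_SIZES:
        if mem_gib >= required_gpu_mem_gib:
            return name
    raise ValueError("requirement exceeds a full MI25 GPU (16 GiB)")


if __name__ == "__main__":
    print(smallest_nvv4_size(1.5))  # Standard_NV4as_v4
    print(smallest_nvv4_size(3))    # Standard_NV8as_v4
```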

Sizing and GPU Tools for your Toolbox

It is important to know how the remoting protocol, the operating system, and the applications use the GPU. The key is to capture the utilization of GPU cores, frame buffer, and encoders over a sufficiently long period. With that information, you can start right-sizing the workload machines.
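As an illustration, here is a minimal Python sketch that summarizes a long capture of GPU samples. It assumes the samples come from an NVIDIA GPU via `nvidia-smi --query-gpu=utilization.gpu,memory.used,memory.total --format=csv,noheader,nounits -l 60`. That command prints one line such as `37, 1830, 8192` per GPU per interval (utilization in percent, memory in MiB).

This sketch does not cover encoder utilization. The helper names are hypothetical, and the 95th percentile is one common choice rather than a Frame recommendation.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Sample:
    gpu_util_pct: float
    fb_used_mib: float
    fb_total_mib: float


def parse_samples(text: str) -> list[Sample]:
    """Parse nvidia-smi CSV lines (no header, no units) into samples."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        util, used, total = (float(field) for field in line.split(","))
        samples.append(Sample(util, used, total))
    return samples


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    if not values:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def summarize(samples: list[Sample], pct: float = 95) -> dict[str, float]:
    """Report a high percentile and the peak for GPU utilization and frame buffer."""
    utils = [s.gpu_util_pct for s in samples]
    fb = [s.fb_used_mib for s in samples]
    return {
        "gpu_util_p": percentile(utils, pct),
        "gpu_util_peak": max(utils),
        "fb_used_p_mib": percentile(fb, pct),
        "fb_used_peak_mib": max(fb),
    }
```

Sizing against the 95th percentile rather than the peak follows the advice not to size for the peaks. The peak value shows how much headroom remains.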

Fortunately, partners offer various solutions that make it easy to monitor and capture GPU and application usage. Examples include ControlUp, Liquidware, Lakeside, and uberAgent.

Besides these solutions, free and community tools are also available. Examples include RD Analyzer, GPU Profiler, GPU-Z, and Windows Performance Monitor in Windows 10 and Windows Server 2019/2022.

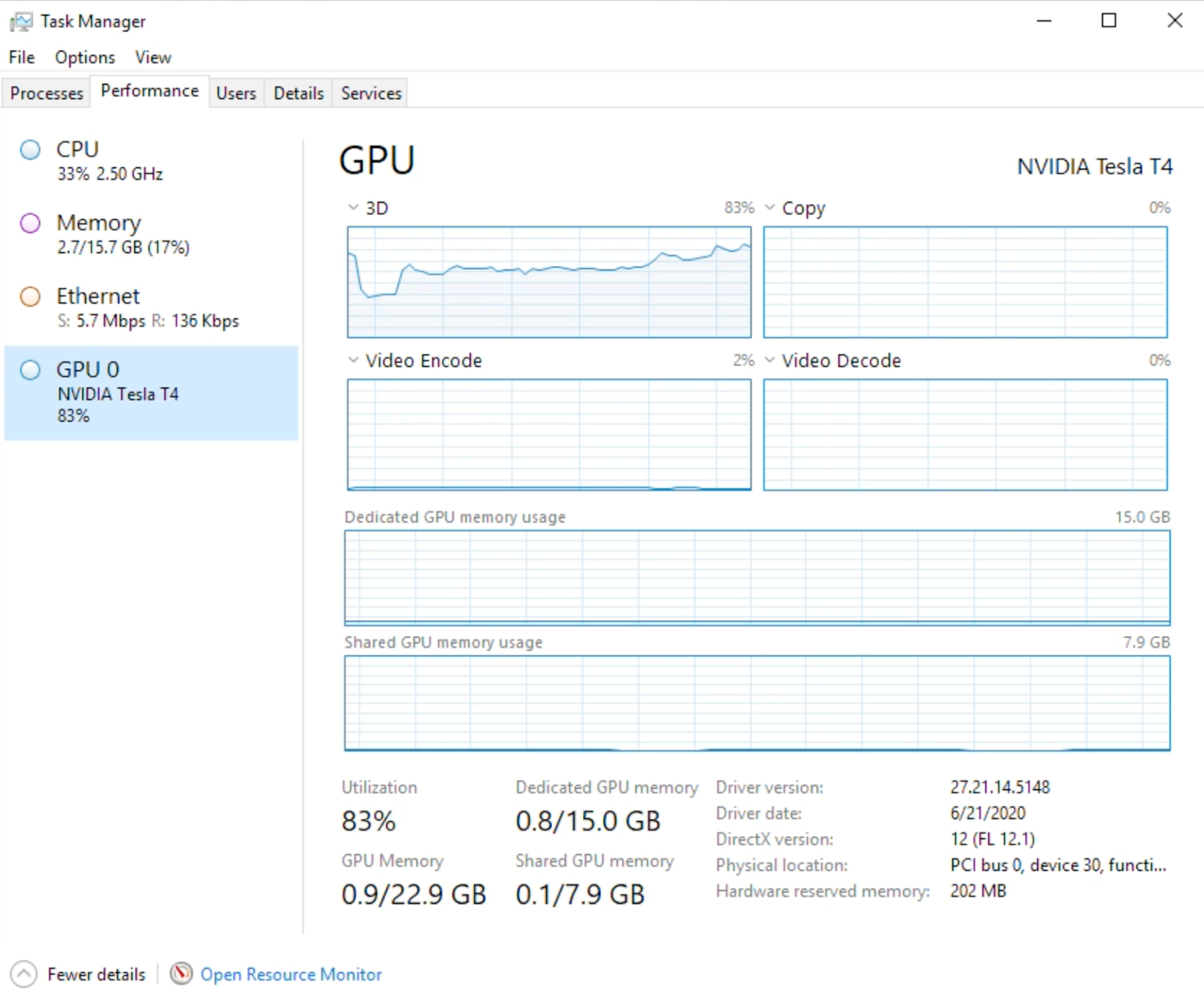

(Figure 2: Using Task Manager in Windows 10/Windows Server 2019+ to gain GPU utilization insights)

(Figure 3: Frame running with GPU Profiler, providing insights into GPU utilization)

Common GPU Mistakes and Tips to Avoid Them

The bad news is that there are lots of possible mistakes. The good news is that I’ll share straightforward tips on how to avoid them!

- Mistake: Sizing your GPUs without any OS and application usage insights.

Tip: Sizing without usage insights is like driving your car in the dark without the lights on; it’s dangerous and stupid. Use tools to get this data.

- Mistake: Taking the specifications of your physical PC or workstation and mapping them 1:1 to DaaS workload VMs.

Tip: Utilization of physical resources is very often much lower, so don’t size for the peaks. Again, use tools to get the utilization insights that improve your sizing. Very often, the frame buffer is the first limit you will reach with GPUs.

- Mistake: Capturing GPU utilization for one hour is fine; I am in a hurry.

Tip: Capture utilization over a longer period to make sure the data set is complete and that you’ve captured enough information.

- Mistake: Expecting applications to perform twice as fast when your VM has two GPUs.

Tip: 99% of applications aren’t multi-GPU capable. You are better off with a single modern, state-of-the-art GPU.

- Mistake: I bought NVIDIA Tesla GPUs for my on-premises Frame with Nutanix AHV deployment; I am good to go!

Tip: Don’t forget the right NVIDIA vGPU software licenses. Hardware plus software is the complete solution here.

- Mistake: We are totally fine with Windows 10 and a 1 GB frame buffer in our NVIDIA vGPU profile.

Tip: Maybe you are, but are you sure? With multiple monitors, higher resolutions, and of course demanding “Power User” applications, a 1 GB frame buffer might not be enough. A good starting point is: 1 GB frame buffer for Windows 10 with productivity applications; 2 GB for a dual-monitor setup; 4 GB for medium to high-end graphics.

- Mistake: The endpoint device doesn’t affect user experience, since applications run virtually; the endpoint is only a display device.

Tip: The capabilities of the endpoint do influence the actual user experience. What are its hardware decoding capabilities? What are its capabilities with regard to multiple monitors and higher resolutions? Can it run the latest browser or the Frame App?

- Mistake: Performance and availability of GPUs in the public cloud? Sure. No problem.

Tip: Understand the different GPU options and GPU hardware characteristics. Also understand that resource availability and guaranteed capacity aren’t always a given! Be sure to request GPU limit increases from your cloud provider well before you need those resources. Sometimes it can take days or more to get your limits raised.
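The frame buffer starting points from the tips above can be written down as a small Python sketch with two functions:

- The first function encodes those starting points: 1 GB for productivity, 2 GB for dual monitors, and 4 GB for medium to high-end graphics.
- The second is a hypothetical extension. It takes the larger of that starting point and the measured 95th-percentile frame buffer usage, then rounds up to the smallest available profile size.

The profile size list is an assumption; use the sizes your GPU actually offers.

```python
from __future__ import annotations


def starting_frame_buffer_gb(monitors: int, graphics_intensive: bool) -> int:
    """Starting point only: 1 GB productivity, 2 GB dual monitor, 4 GB medium/high-end graphics."""
    if monitors < 1:
        raise ValueError("at least one monitor expected")
    if graphics_intensive:
        return 4
    if monitors >= 2:
        return 2  # more monitors or higher resolutions may need more; measure it
    return 1


def pick_profile_gb(start_gb: float, measured_p95_gb: float,
                    profile_sizes_gb: tuple[float, ...] = (1, 2, 4, 8, 16)) -> float:
    """Smallest profile covering both the starting point and the measured p95 usage."""
    needed = max(start_gb, measured_p95_gb)
    for size in sorted(profile_sizes_gb):
        if size >= needed:
            return size
    raise ValueError("no listed profile is large enough")


if __name__ == "__main__":
    start = starting_frame_buffer_gb(monitors=2, graphics_intensive=False)  # 2
    print(pick_profile_gb(start, measured_p95_gb=2.6))  # 4
```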

Happy Reading!

Do you want to read more about DaaS from Nutanix, and discover how Frame differs from Amazon, Citrix, Microsoft, and VMware? Be sure to check out this blog! Interested in a wide and deep perspective on all things DaaS and Frame? All my blogs are listed in one simple overview here.

Ruben Spruijt - Sr. Technologist, Nutanix - ruben.spruijt@nutanix.com @rspruijt

© 2021 Nutanix, Inc. All rights reserved. Nutanix, the Nutanix logo and all Nutanix product and service names mentioned herein are registered trademarks or trademarks of Nutanix, Inc. in the United States and other countries. Other brand names mentioned herein are for identification purposes only and may be the trademarks of their respective holder(s). This post may contain links to external websites that are not part of Nutanix.com. Nutanix does not control these sites and disclaims all responsibility for the content or accuracy of any external site. This post may contain express and implied forward-looking statements, which are not historical facts and are instead based on our current expectations, estimates and beliefs. The accuracy of such statements involves risks and uncertainties and depends upon future events, including those that may be beyond our control, and actual results may differ materially and adversely from those anticipated or implied by such statements. Any forward-looking statements included herein speak only as of the date hereof and, except as required by law, we assume no obligation to update or otherwise revise any of such forward-looking statements to reflect subsequent events or circumstances.