What is containerization?

Containerization is an approach to software engineering that involves packaging an application together with everything it needs to run (code, runtime, libraries, and configuration files) in an isolated digital “container” so it can run consistently in virtually any environment. A container can be deployed to a private datacenter, public cloud, or even a personal laptop, regardless of technology platform or vendor.

What are the benefits of containerization?

Containerization delivers a number of benefits to software developers and IT operations teams. Specifically, a container enables developers to build and deploy applications more quickly and securely than is possible with traditional modes of development. Without containers, developers write code in a specific environment, e.g., on the Linux or Windows Server “stack.” This approach can cause problems if the application has to be transferred to a new environment, such as one running a different version of the OS, or from one OS to another.

Placing the application code in the “container” together with the OS configuration files, libraries, and other dependencies it needs to run abstracts the application from whatever OS is hosting it, which makes it portable. The application can move across platforms with few issues. Containerization also helps abstract software from its runtime environment by making it easy to share CPU, memory, storage, and network resources.
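To make the packaging idea concrete, here is a minimal Python sketch that renders the text of a Dockerfile, the build recipe Docker uses to bundle an application with its dependencies. The function name `render_dockerfile`, the `python:3.12-slim` base image, the `flask` dependency, and the `app.py` entry point are illustrative assumptions, not something this article prescribes.

```python
import json


def render_dockerfile(base_image, packages, app_dir, command):
    """Return Dockerfile text that bundles an app with its dependencies.

    All arguments are hypothetical examples supplied by the caller.
    """
    lines = [
        f"FROM {base_image}",   # base layer: OS files plus language runtime
        "WORKDIR /app",         # working directory inside the image
    ]
    if packages:
        # Install library dependencies into the image at build time.
        lines.append("RUN pip install --no-cache-dir " + " ".join(packages))
    # Copy the application code into the image.
    lines.append(f"COPY {app_dir} /app")
    # Exec (JSON array) form of CMD: the process started with the container.
    lines.append("CMD " + json.dumps(list(command)))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_dockerfile("python:3.12-slim", ["flask"], ".", ["python", "app.py"]))
```

Because the base image, libraries, and code all travel inside the resulting image, the host only needs a container runtime, which is where the portability described above comes from.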

Other benefits of containerization include:

- Security - Containers are isolated in the host environment, so they are less vulnerable to being compromised by malicious code. In addition, security policies can block containers from being deployed or communicating with each other, which safeguards the environment.

- Agility - When orchestrated and managed effectively, containers make it possible to be more agile in IT. Developers and IT operations teams can typically deploy containers more quickly than is possible with traditional software.

- Speed and efficiency - Most containers are “light,” meaning they comprise fewer software resources than their traditional software counterparts. As a result, they tend to run more quickly and use system resources more efficiently.

- Fault isolation - Each container functions independently. This creates a barrier between containers that isolates faults, with the result that a fault in one container does not affect how other containers function.
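The fault isolation point can be illustrated with a rough analogy in Python, using separate OS processes as stand-ins for containers. This is only a sketch: real containers add kernel namespaces and resource limits on top of process separation, and the workload names and the `run_isolated` helper are hypothetical.

```python
import subprocess
import sys

# Hypothetical workloads: each string is a tiny program standing in for
# an application that would run in its own container.
WORKLOADS = {
    "web": "print('web ok')",
    "faulty": "raise RuntimeError('simulated crash')",
    "worker": "print('worker ok')",
}


def run_isolated(workloads):
    """Run each workload in its own process and return its exit code."""
    results = {}
    for name, source in workloads.items():
        completed = subprocess.run(
            [sys.executable, "-c", source],
            capture_output=True,
            text=True,
        )
        results[name] = completed.returncode
    return results


if __name__ == "__main__":
    for name, code in run_isolated(WORKLOADS).items():
        status = "healthy" if code == 0 else "failed"
        print(f"{name}: {status}")
```

The crash in the `faulty` workload ends only its own process; the other two workloads still exit cleanly.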

Containerization and cloud native applications

Containerization is often a key enabler for building cloud-native applications because containers provide the lightweight and portable runtime environment required for deploying microservices at scale in the cloud. As a result, containerization and cloud-native applications are closely intertwined, with many cloud-native applications being built and deployed using containerization technologies like Docker and Kubernetes.

Containerization offers several benefits for cloud-native applications:

- Cost efficiency - The pay-per-use model of cloud platforms, combined with open-source tooling, allows DevOps teams to pay only for the backup, maintenance, and resources they use.

- Better security - Cloud native applications use two-factor authentication, restrict access, and share only relevant data and fields.

- Adaptability and scalability - Cloud native applications can scale and adapt as needed, which reduces the need for updates, and can grow as the business grows.

- Flexible automation - Cloud native applications allow DevOps teams to collaborate with CI/CD processes for deployment, testing, and gathering feedback. Organizations can also work on multiple cloud platforms, whether public, private, or hybrid, for enhanced productivity and customer satisfaction.

- Removes vendor lock-in - DevOps teams can work with multiple cloud providers on the same cloud native platform, eliminating vendor lock-in.

- Enhanced containerization technology - Application containerization works across Linux and select Windows and macOS environments, including bare-metal systems, cloud instances, and virtual machines. Multiple containerized applications can run on a single host and share the same operating system through this virtualization method.

Containerization technology vs virtualization

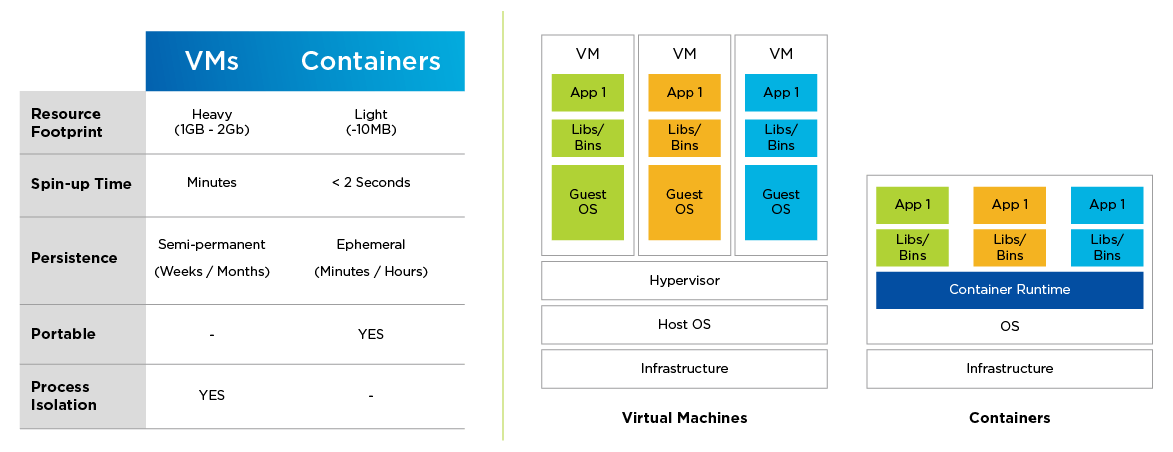

While container adoption is rapidly outpacing the growth of virtual machines (VMs), containers likely won’t replace VMs outright. In general, containerization technology drives the speed and efficiency of application development, whereas virtualization drives the speed and efficiency of infrastructure management.

At a glance, here is a comparison of containers and VMs across several common criteria:

What is container orchestration?

Container orchestration involves a set of automated processes by which containers are deployed, networked, scaled, and managed. The main container orchestration platform used today is Kubernetes, which is an open-source platform that serves as the basis for many of today’s enterprise container orchestration platforms.
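One common way to think about these automated processes is as a control loop that repeatedly compares the desired state with what is actually running and corrects the difference. The sketch below is a toy model in plain Python, not Kubernetes code; `Deployment`, `reconcile`, and the `healthy` callback are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from itertools import count

_ids = count(1)  # source of unique suffixes for container names


@dataclass
class Deployment:
    name: str
    desired_replicas: int
    running: list = field(default_factory=list)


def reconcile(deployment, healthy):
    """Run one pass of the control loop and return the actions taken."""
    actions = []
    # 1. Remove containers that fail the health check.
    for cid in list(deployment.running):
        if not healthy(cid):
            deployment.running.remove(cid)
            actions.append(f"remove {cid}")
    # 2. Scale up until the desired replica count is reached.
    while len(deployment.running) < deployment.desired_replicas:
        cid = f"{deployment.name}-{next(_ids)}"
        deployment.running.append(cid)
        actions.append(f"start {cid}")
    # 3. Scale down if more containers run than desired.
    while len(deployment.running) > deployment.desired_replicas:
        actions.append(f"stop {deployment.running.pop()}")
    return actions


if __name__ == "__main__":
    web = Deployment("web", desired_replicas=3)
    print(reconcile(web, healthy=lambda cid: True))         # initial rollout
    crashed = web.running[0]
    print(reconcile(web, healthy=lambda cid: cid != crashed))  # replace a crash
    web.desired_replicas = 1
    print(reconcile(web, healthy=lambda cid: True))         # scale down
```

The same loop handles deployment, recovery from failures, and scaling, because each is just a gap between desired and observed state.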

What are the types of container technology?

There are many different container technologies. Some are open source. Others are proprietary, or proprietary add-ons to open-source solutions. Here are some of the most commonly used container technologies.

Docker

Docker is a large-scale multifaceted suite of container tools. Docker Compose enables developers to define and run multi-container applications, spinning up new container-based environments relatively quickly. With Docker, it is relatively simple to get an application to run inside a container. Docker integrates with the major development toolsets, such as GitHub and VS Code. The Docker Engine is able to run on Linux, Windows, and Apple’s macOS.

From there, Docker lets developers share, run, and verify containers. A Docker container can run on Amazon Web Services (AWS) Elastic Container Service (ECS), Microsoft Azure, Google Kubernetes Engine (GKE), and other platforms. One advantage of Docker is that the development environment and runtime environment are identical. There are few issues switching back and forth, which saves time and reduces complications in the development lifecycle.

Linux

The Linux operating system enables users to create container images natively, or through the use of tools like Buildah. The Linux Containers project (LXC), an open-source container platform, offers an OS-level virtualization environment for systems that run on Linux. It is available for many different Linux distributions. LXC gives developers a suite of components, including templates, libraries, and tools, along with language bindings. It runs through a command line interface.

Kubernetes

Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services that facilitates both declarative configuration and automation. It has a large, rapidly growing ecosystem. Kubernetes services, support, and tools are widely available. Kubernetes provides users with:

- Service discovery and load balancing

- Storage orchestration

- Automated rollouts and rollbacks

- Automatic bin packing

- Self-healing

- Secret and configuration management
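As an illustration of the automatic bin packing item in the list above, the following Python sketch places containers onto as few nodes as it can using a first-fit decreasing heuristic. This is a swapped-in textbook technique for illustration only; the Kubernetes scheduler makes placement decisions by filtering and scoring nodes rather than by this algorithm. The function name, the container names, and the CPU figures (in millicores) are assumptions.

```python
def place_containers(cpu_requests, node_capacity):
    """Assign containers to nodes with first-fit decreasing.

    cpu_requests: dict mapping container name to requested CPU (millicores).
    node_capacity: CPU available on each (identical) node, in millicores.
    Returns a list of nodes, each a list of container names.
    """
    nodes = []  # each node: {"free": remaining CPU, "containers": [names]}
    # Consider the largest requests first so they are not left stranded.
    ordered = sorted(cpu_requests.items(), key=lambda kv: kv[1], reverse=True)
    for name, cpu in ordered:
        if cpu > node_capacity:
            raise ValueError(f"{name} requests more CPU than any node offers")
        for node in nodes:
            if node["free"] >= cpu:        # first node with enough room
                node["containers"].append(name)
                node["free"] -= cpu
                break
        else:                              # nothing fits: add another node
            nodes.append({"free": node_capacity - cpu, "containers": [name]})
    return [node["containers"] for node in nodes]


if __name__ == "__main__":
    requests = {"api": 600, "db": 700, "cache": 300, "web": 400}
    print(place_containers(requests, node_capacity=1000))
    # -> [['db', 'cache'], ['api', 'web']]
```

Four containers that total 2000 millicores fit exactly onto two 1000-millicore nodes here, which is the kind of resource efficiency bin packing aims for.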

Containerization vs microservices

Containers and microservices are similar and related constructs that may be used together, but they differ in several key ways. A microservice, the modern realization of the service-oriented architecture (SOA) paradigm, packages a single function of an application in a discrete unit of software. Typically, a microservice has one small job to do, in concert with other microservices. For example, one microservice processes logins, while another delivers the user interface (UI), and so forth. This approach helps with agility and resiliency.

Here’s the difference: Microservices represent an architectural paradigm. Containers, on the other hand, represent one specific way of implementing the microservices paradigm. Organizations may choose to use containers to implement a microservices architecture because of performance, security, and manageability concerns.

What is containerization in the cloud?

The Kubernetes ecosystem is broad and complex and no single technology vendor offers all of the components of a complete on-prem modern applications stack. Beginning with the innovative approach to infrastructure that Nutanix pioneered with HCI and AOS, Nutanix has several core competencies that are both rare and difficult to replicate that offer differentiated value to customers.

Nutanix’s primary technology strengths for building on-prem Kubernetes environments include:

- Distributed systems management capabilities

- Integrated storage solutions covering the three major classes: Files, Volumes, and Objects storage

- Nutanix Kubernetes Engine - A fully integrated Kubernetes management solution with a native Kubernetes user experience

We believe Nutanix hyperconverged infrastructure (HCI) is the ideal infrastructure foundation for containerized workloads running on Kubernetes at scale. Nutanix provides platform mobility, giving you the choice to run workloads on both your Nutanix private cloud and the public cloud. The Nutanix architecture was designed with hardware failures in mind, which offers better resilience for both Kubernetes platform components and application data. With the addition of each HCI node, you benefit from the scalability and resilience provided to the Kubernetes compute nodes. Equally important, an additional storage controller deploys with each HCI node, which results in better storage performance for your stateful containerized applications.

The Nutanix Cloud Platform provides a built-in turnkey Kubernetes experience with Nutanix Kubernetes Engine (NKE). NKE is an enterprise-grade offering that simplifies the provisioning and lifecycle management of multiple clusters. Nutanix is about customer choice: customers can run their preferred distribution, such as Red Hat OpenShift, Rancher, Google Cloud Anthos, Microsoft Azure, and others, thanks to the superior full-stack resource management.

Nutanix Unified Storage provides persistent and scalable software-defined storage to the Kubernetes clusters. This includes block and file storage via the Nutanix CSI driver as well as S3-compatible object storage. Furthermore, with Nutanix Database Service, you can provision and operate databases at scale.
