What is server virtualization?

Server virtualization is a process through which an organization can separate server software from its hardware and create multiple virtual servers with their own operating systems and applications that run on a single physical server. Each virtual server is separated from the others and runs completely independently without compatibility issues. Server virtualization is the underlying basis for cloud computing and enables a variety of hybrid cloud models.

By virtualizing its servers, an organization can cost-effectively use or provide web hosting services and make the most of its compute, storage, and networking resources across its entire infrastructure. Because servers rarely use their full processing power around the clock, many server resources go unused. In fact, some experts say it isn’t uncommon for a server to be just 15% to 25% utilized at any one time. Servers can sit idle for hours or days as workloads are distributed to a small percentage of an organization’s entire server collection. These idle servers take up precious space in the data center, consume power, and demand time and effort from the IT staff who maintain them.

Through server virtualization, an organization can load a single physical server with dozens of virtual servers (also called virtual machines, or VMs) and ensure that the server’s resources are used more effectively. As a result, a data center can be more efficient with fewer physical machines. Through virtualization, organizations can easily keep resources utilized and dynamically adapt to each workload’s needs as they change.
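As a rough illustration of the consolidation argument above, the following sketch estimates how many hosts would be needed if lightly used servers were consolidated. It is a back-of-the-envelope model, not a sizing tool: the function name `hosts_needed` and the 70% target utilization are assumptions made for this example, and the model assumes identical servers while ignoring hypervisor overhead, memory limits, and peak loads.

```python
import math


def hosts_needed(server_count: int, avg_utilization: float,
                 target_utilization: float) -> int:
    """Estimate hosts needed to carry the same total load at a higher utilization.

    Hypothetical helper for illustration; utilization values are fractions (0.20 = 20%).
    """
    if not 0 < avg_utilization <= 1 or not 0 < target_utilization <= 1:
        raise ValueError("utilization values must be in the range (0, 1]")
    # Total work expressed in units of "one fully busy server".
    total_load = server_count * avg_utilization
    # Round up because a fraction of a host still requires a whole machine.
    return math.ceil(total_load / target_utilization)


# 100 servers running at 20% could, under these assumptions, fit on 29 hosts at 70%.
print(hosts_needed(100, 0.20, 0.70))  # 29
```

A real capacity plan would also account for memory, storage, network throughput, and headroom for failover.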

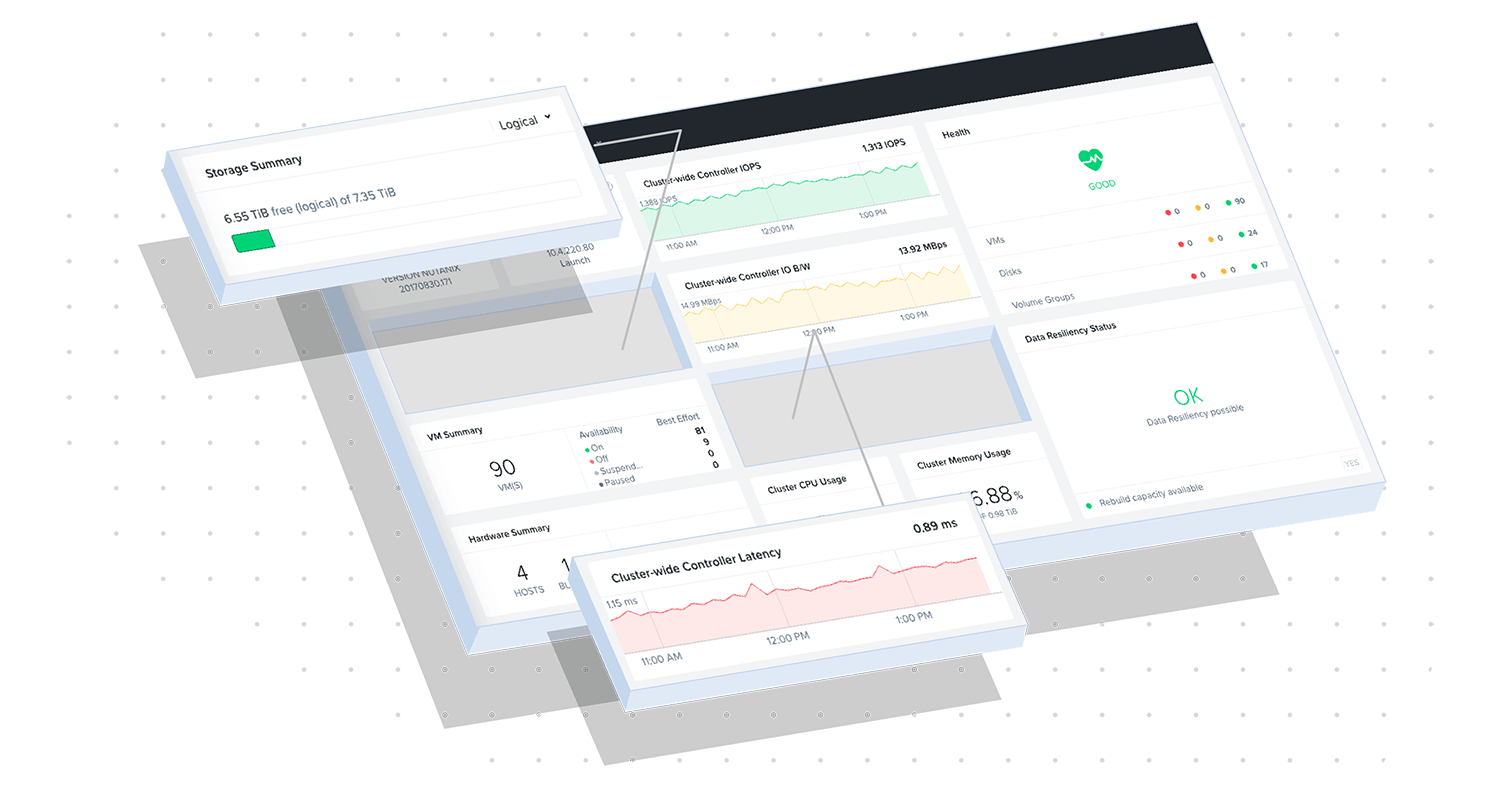

Mission Control

Mission Control gives you everything you need for success with AHV Virtualization, from migration and day-one basics to advanced objectives.

What is a virtual server?

A virtual server is a virtualized “instance” on a dedicated physical server. It is an isolated space with its own operating system, policies, applications, and services. Although it acts independently, it resides on a single server with a number of other virtual machines and shares software and hardware resources with those other machines through the use of a hypervisor. Each virtual server is considered a guest of the main physical server, which is the host.

How server virtualization works

An IT administrator can create multiple virtual machines on a single physical server and configure each one independently. The admin does this by using a hypervisor, which is also sometimes referred to as a virtual machine monitor. In addition to enabling the isolation of the server’s software from its hardware, the hypervisor acts as a controller and organizes, manages, and allocates resources among all the virtual machines on the host server.

Through abstraction, the hypervisor organizes all the computer’s resources—such as network interfaces, storage, memory, and processors—and gives each resource a logical alias. The hypervisor uses those resources to create virtual servers, or VMs. Each VM is made up of virtualized processors, memory, storage, and networking tools, and while it resides alongside multiple other VMs, it doesn’t “realize” it’s just one of many. It acts completely independently as if it were a single physical server.
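The allocation idea described above can be sketched in a few lines. This is a simplified model rather than how any particular hypervisor is implemented: the `Host` class, its fields, and the `create_vm` method are hypothetical names invented for this example, and unlike many real hypervisors the sketch does not overcommit CPU or memory.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class Host:
    """A physical server whose CPUs and memory are handed out to VMs (illustrative)."""
    cpus: int
    memory_gb: int
    # VM name -> (virtual CPUs, memory in GB) carved out of the host's pool.
    vms: Dict[str, Tuple[int, int]] = field(default_factory=dict)

    def free(self) -> Tuple[int, int]:
        """Return (free CPUs, free memory in GB) not yet assigned to any VM."""
        used_cpus = sum(vcpus for vcpus, _ in self.vms.values())
        used_mem = sum(mem for _, mem in self.vms.values())
        return self.cpus - used_cpus, self.memory_gb - used_mem

    def create_vm(self, name: str, vcpus: int, memory_gb: int) -> bool:
        """Create a VM if the host has enough free resources; return True on success."""
        if name in self.vms:
            raise ValueError(f"VM {name!r} already exists")
        free_cpus, free_mem = self.free()
        if vcpus > free_cpus or memory_gb > free_mem:
            return False  # the host's resources are the limiting factor
        self.vms[name] = (vcpus, memory_gb)
        return True


host = Host(cpus=16, memory_gb=64)
print(host.create_vm("web", vcpus=4, memory_gb=16))  # True
print(host.create_vm("db", vcpus=8, memory_gb=32))   # True
print(host.create_vm("big", vcpus=8, memory_gb=8))   # False: only 4 CPUs remain
print(host.free())                                   # (4, 16)
```

Each VM sees only the slice it was given, which mirrors the point that a VM does not "realize" it shares the host with others.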

Now the organization has a physical server with multiple separate, fully functional computers working within it. The host server can have VMs with differing OSes and a wide variety of applications and systems that might not normally work together.

As the hypervisor monitors and oversees all the host server’s VMs, it can also reallocate resources as needed. If one VM is idle overnight, for instance, its computing and storage resources can be reassigned to another VM that might need extra resources during that time. In this way, the server’s resources are much more fully utilized overall.
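The reallocation described above might look something like the following sketch, which shifts memory from an idle VM to a busy one. The function `rebalance_memory` and the `floor_gb` safety margin are assumptions made for this example; real hypervisors use their own mechanisms and policies for this.

```python
from typing import Dict


def rebalance_memory(allocations: Dict[str, int], idle_vm: str, busy_vm: str,
                     amount_gb: int, floor_gb: int = 2) -> Dict[str, int]:
    """Return new allocations with up to amount_gb moved from idle_vm to busy_vm.

    The idle VM always keeps at least floor_gb so it can still run.
    """
    if amount_gb < 0:
        raise ValueError("amount_gb must not be negative")
    if busy_vm not in allocations:
        raise KeyError(busy_vm)
    # Never take more than the idle VM can give up while staying above the floor.
    movable = max(0, allocations[idle_vm] - floor_gb)
    moved = min(amount_gb, movable)
    updated = dict(allocations)  # leave the caller's mapping untouched
    updated[idle_vm] -= moved
    updated[busy_vm] += moved
    return updated


overnight = rebalance_memory({"reporting": 16, "web": 8},
                             idle_vm="reporting", busy_vm="web", amount_gb=10)
print(overnight)  # {'reporting': 6, 'web': 18}
```

The total stays constant: the host's resources are only redistributed, never created.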

VMs can also be moved to other host servers if needed through a simple duplication or cloning operation. The only limit to how many VMs a host server can accommodate is the compute resources it has, so newer servers with more resources can naturally support more VMs.

Server virtualization is a critical component in cloud computing. In fact, many experts consider server virtualization one of the main pillars of cloud computing (along with other components such as automation, self-service, and end-to-end monitoring). Virtualization makes cloud computing possible because it allows a server’s resources to be divvied up among multiple VMs and allows organizations to get the ultra-fast scaling they need without giving up workload isolation.

Types of server virtualization

There are three types of server virtualization, and the difference primarily lies in how isolated each VM is.

- Full virtualization – This type of server virtualization most closely resembles what has been described above. A physical host server’s resources are divided up to create multiple virtual machines that are completely separated from each other and act independently without any knowledge of the other VMs on the host. The hypervisor for this type is often called a bare-metal hypervisor because it is installed directly onto the physical hardware and acts as a layer between the hardware and the VMs and their unique operating systems. The only possible downside to this type is that the all-important hypervisor comes with its own resource needs and can sometimes cause a slowdown in performance. With full virtualization, the host server does not need to have an operating system.

- Para-virtualization – In this type, the guest operating systems aren’t completely unaware that they are virtualized. Each guest OS is modified to work together with the hypervisor through hypercall commands, requesting privileged operations directly instead of relying on the hypervisor to emulate the hardware in full. Because the guests do some of this work themselves, the hypervisor requires less processing power to manage the entire system. This type emerged in response to the performance issues experienced by early hypervisors that relied on full virtualization. The trade-off is that each guest OS must be modified to support it. Today, para-virtualization is less commonly used because modern servers include hardware virtualization support and are designed to work more effectively with bare-metal hypervisors.

- OS-level, or hosted, virtualization – This type of virtualization completely eliminates the need for a hypervisor. All of the virtualization capabilities are enabled by the host server’s operating system, which stands in for the hypervisor. One limitation of this type is that while each virtual instance (commonly called a container) can still operate independently, all instances must share the host server’s OS kernel. That means they can also share the host OS’s common binaries and libraries. While considered the most basic method of server virtualization, it can also be managed and maintained with fewer resources than the other types. By not having to duplicate an OS for every instance, OS-level virtualization makes it possible to support thousands of instances on a single server. A disadvantage, however, is that this type results in a single point of failure. If the host OS is attacked or goes down for any reason, all the instances are affected as well.

Server virtualization benefits

Benefits of server virtualization include:

- More efficient use of server resources, with less need for (and less spending on) physical hardware

- Cost savings through server consolidation, reduction of hardware footprint, and elimination of wasted or idle resources

- Enhanced server versatility with the flexibility of creating VMs with different OSes and applications

- Increased application performance, thanks to the ability to use VMs for dedicated workloads

- Faster workload deployment with quick, easy VM duplication and cloning, and the flexibility to move VMs to different host servers if needed

- Increased IT productivity and efficiency by reducing server sprawl and complex management and maintenance of a large number of physical servers

- Added disaster recovery and backup benefits with easy replication of existing VMs, snapshots, and ability to move them as desired

- Lower energy consumption by reducing the number of physical machines that take up space and need cooling and power

- Reduced security threats due to each VM on a host server being isolated; if one VM is attacked, the others aren’t necessarily compromised

Server virtualization challenges

Despite the many benefits of server virtualization, there are still some challenges:

- Software licensing can be complex (and costly) because one physical server might host dozens of different VMs with a wide range of applications and services; for instance, full server virtualization means each VM has its own OS, and each OS requires a separate license

- A host server failure can adversely affect all of its VMs—so 10 applications go down instead of just one, for instance

- VM sprawl can become an issue if IT doesn’t stay on top of where VMs are and how and when they’re being used. It’s so easy to spin up new instances that many VMs are used temporarily for testing and then abandoned when no longer needed. If they’re not actually deleted from the system, they can remain in the background and continue to consume power and resources that active VMs need.

- If VMs aren’t planned and created with forethought, server performance can slow down when too many resource-hungry VMs share the host, especially when it comes to networking and memory
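To make the VM sprawl challenge in the list above more concrete, here is a minimal sketch of how abandoned VMs might be flagged for review. It assumes an inventory that records when each VM was last active; the `VmRecord` type, the `find_stale_vms` function, and the 30-day threshold are hypothetical choices for this example, not features of any specific platform.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class VmRecord:
    """One row of a hypothetical VM inventory."""
    name: str
    owner: str
    last_active: datetime


def find_stale_vms(inventory: List[VmRecord], now: datetime,
                   max_idle_days: int = 30) -> List[str]:
    """Return names of VMs that have been inactive for longer than max_idle_days."""
    cutoff = now - timedelta(days=max_idle_days)
    return [vm.name for vm in inventory if vm.last_active < cutoff]


inventory = [
    VmRecord("web-01", "ops", datetime(2024, 5, 30)),
    VmRecord("build-test", "dev", datetime(2024, 3, 1)),
]
print(find_stale_vms(inventory, now=datetime(2024, 6, 1)))  # ['build-test']
```

Flagged VMs are candidates for review rather than automatic deletion, since a quiet VM may still be needed.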

Is server virtualization secure?

Server virtualization does have some inherent advantages when it comes to security. For instance, data is stored in a centralized place that is fairly simple to manage, instead of being left on unauthorized or less-secure edge or end-user devices. The isolation between VMs also helps keep attacks, malware, viruses, and other vulnerabilities isolated as well.

Thanks to virtualization’s granular access control, IT has a higher degree of control over who is able to get to the data stored in the system. Micro-segmentation is often employed to give people access only to specific applications or resources, down to the level of a single workload. Also, virtualizing desktops helps ensure that IT personnel stay responsible for updating and patching operating systems and applications, something end users might not stay on top of individually.

Hypervisors can also reduce security risks: because they run on fewer resources than a full operating system, they present a smaller attack surface. Many can also be updated automatically, which helps keep them protected from evolving threats.

On the other hand, server virtualization can present some security risks as well. One of the most common is simply the increased complexity of a virtualized environment. Because VMs can be duplicated and workloads moved to different locations pretty easily, it’s more difficult for IT to adhere to security best practices or even keep to consistent configurations or policies throughout the entire ecosystem.

VM sprawl can also pose a security risk. Those idle and abandoned VMs not only continue to consume resources and power, but they also aren’t likely to be patched or updated, which leaves them vulnerable and an attractive target for attackers.

While the isolation between VMs can reduce security risks in one way, it still doesn’t reduce the effect of a distributed denial of service (DDoS) attack. If a DDoS attack affects a VM’s performance by hitting it with a flood of malicious traffic, the other VMs that share that host server’s resources will be affected as well.

IT can reduce the security risks server virtualization presents with some best practices, which include keeping all software and firmware up to date across the entire system, installing and updating antivirus and other software designed for virtualization solutions, staying on top of who’s accessing the system, encrypting network traffic, deleting unused VMs, doing regular backups for VMs and physical servers, and defining and implementing a clear and detailed user policy for VMs and host servers.

Use cases for server virtualization

- Data center consolidation – By virtualizing servers, an organization can reduce its need for physical hardware and also cut down on power and cooling costs.

- Testing environments – It’s so easy to spin up new VM instances and provision them that many organizations are using them for development and testing initiatives.

- Desktop virtualization – Virtualized desktop infrastructure offers the benefits of flexibility, centralized management, increased security, and simplicity.

- Backup and disaster recovery – Virtualization is a great way to approach backup and disaster recovery because it makes it simple to make backups and take snapshots of VMs that can be quickly recovered if disaster strikes.

- Cloud computing – Cloud computing relies heavily on virtualization and automation.

- Increased availability – Live migration of VMs allows organizations to move a VM from one physical server to another without disrupting services. Virtualization also enables business-critical systems and applications to stay up and running even during maintenance cycles or when testing new developments.

- Support for multiple platforms – With virtualization, organizations can run a variety of workloads with differing operating systems without the need for OS-dedicated hardware.

Implementing server virtualization

When planning to implement server virtualization across an organization, there are some important steps to keep in mind. The following are some best practices that can help:

- Create a plan – Make sure all stakeholders have a good understanding of how and why the organization needs a virtualization platform before diving into a full-blown initiative. Consider the costs and potential complexities. How does it fit into your business plan?

- See what’s out there – It’s important to assess the hardware and solutions to get a sense of the scope of your project. What solutions are available? What are your competitors using? Due diligence in this step will go a long way toward a successful implementation.

- Test and experiment – You should try out any potential solution to see how it works in the real world and how it affects your day-to-day operations. Can IT easily manage the work it will create? IT should feel comfortable with any solution before making a purchase decision. They’ll be the ones managing it and keeping it running and should be well aware of the potential pitfalls and challenges any solution will present.

- Consider business needs – Does the proposed solution meet your organization’s unique virtualization needs? How will it affect your IT infrastructure’s security, compliance, disaster recovery plans, and so on? IT should have a deep understanding of the solution’s implications across your entire ecosystem.

- Start small, then scale – If your organization is new to virtualization, it’s smart to experiment with a small deployment on non-critical systems so IT can learn what is required to run and manage it day to day.

- Develop a set of guidelines – You’ll need to put in some thought about VM provisioning, as well as their lifecycles and how they’ll be monitored. Guidelines will help you stay within budgets, avoid wasting resources and VM sprawl, and adhere to agreed-upon behaviors and responsibilities to maintain the system.

- Select the right tools – Even after deciding on a virtualization platform, you’ll need to consider obtaining additional tools to help you leverage the more advanced features of the solution and better manage the system.

- Don’t forget about automation – Make sure IT staff have a good understanding of automation practices and tools because automation goes hand-in-hand with virtualization.

What are some best practices for managing VMs?

While one of the benefits of server virtualization is that it centralizes and simplifies server management, it can also bring some challenges—especially if your IT staff isn’t familiar with virtualization techniques and practices. Here are some best practices for managing VMs efficiently.

Reduce VM sprawl through self-service management – As mentioned previously in this article, it’s very easy to create VMs and even easier to forget about them once they’re no longer active. By relying on self-service VM management, you put the responsibility for deleting those unused VMs in the hands of the end user. Self-service means they have to request their own VMs, which makes it easier for them to manage (and remove) them.

Use templates to right-size VMs – It can be a temptation to create VMs with more resources than they really need. Simply adding CPUs, for instance, won’t necessarily lead to better performance—but it often does lead to wasted resources. Creating VM templates for specific functions can help reduce the tendency to overprovision.
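One way to picture the template approach is a small catalog of predefined sizes that every provisioning request must go through. The sketch below is only an illustration: the `VmTemplate` type, the role names, and all of the sizes are hypothetical values chosen for this example.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class VmTemplate:
    """A fixed, pre-approved VM size for one kind of workload (illustrative)."""
    role: str
    vcpus: int
    memory_gb: int
    disk_gb: int


# Hypothetical catalog; real sizes should come from measured workload needs.
TEMPLATES: Dict[str, VmTemplate] = {
    "web": VmTemplate("web", vcpus=2, memory_gb=4, disk_gb=40),
    "database": VmTemplate("database", vcpus=4, memory_gb=16, disk_gb=200),
}


def provision_request(role: str, name: str) -> Dict[str, object]:
    """Build a provisioning request from a template instead of ad hoc sizing."""
    template = TEMPLATES.get(role)
    if template is None:
        raise KeyError(f"no template defined for role {role!r}")
    return {
        "name": name,
        "vcpus": template.vcpus,
        "memory_gb": template.memory_gb,
        "disk_gb": template.disk_gb,
    }


print(provision_request("web", "web-01"))
```

Because requesters choose a role rather than raw CPU and memory numbers, overprovisioning requires a deliberate change to the catalog.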

Find the right tools to help monitor performance – The tools that come with your hypervisor and virtualization platform can give you good insight into VM performance. But as environments scale, you’ll need more robust tools that can give a deeper view into VMs that aren’t being used, as well as information about deployment effectiveness and overall performance.

Provide the appropriate permissions to maintain VM security – In a virtualized environment, IT can delegate management tasks to other users—but it’s important they’re the right users. Make sure you are able to set up a hierarchy that shows which parts of your infrastructure require which permissions. You’ll want to enable simple assignment of permissions as well as equally simple revocation of permission when needed.
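The permission hierarchy described above can be sketched as a simple role-based model with easy assignment and revocation. This is a toy example under stated assumptions: the role names, the permission strings, and the `AccessControl` class are all invented here and do not correspond to any particular product’s API.

```python
from typing import Dict, Set, Tuple

# Hypothetical roles, each mapped to the actions it permits.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"vm.view"},
    "operator": {"vm.view", "vm.power"},
    "admin": {"vm.view", "vm.power", "vm.create", "vm.delete"},
}


class AccessControl:
    """Tracks which role each user holds on each part of the infrastructure."""

    def __init__(self) -> None:
        # (user, scope) -> role, where scope names a cluster, host, or VM group.
        self._assignments: Dict[Tuple[str, str], str] = {}

    def grant(self, user: str, scope: str, role: str) -> None:
        if role not in ROLE_PERMISSIONS:
            raise ValueError(f"unknown role {role!r}")
        self._assignments[(user, scope)] = role

    def revoke(self, user: str, scope: str) -> None:
        # Revoking a missing assignment is a no-op, which keeps revocation simple.
        self._assignments.pop((user, scope), None)

    def is_allowed(self, user: str, scope: str, permission: str) -> bool:
        role = self._assignments.get((user, scope))
        return role is not None and permission in ROLE_PERMISSIONS[role]


acl = AccessControl()
acl.grant("dana", "test-cluster", "operator")
print(acl.is_allowed("dana", "test-cluster", "vm.power"))   # True
print(acl.is_allowed("dana", "test-cluster", "vm.delete"))  # False
acl.revoke("dana", "test-cluster")
print(acl.is_allowed("dana", "test-cluster", "vm.view"))    # False
```

Scoping each grant to one part of the infrastructure keeps delegated users from gaining rights elsewhere by accident.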

Enable remote access through VPN and multifactor authentication – As hybrid work models have led to more people working from home, it’s important to have a good way to access the virtualized environment remotely. A VPN connection with multifactor authentication is recommended.

Use a backup and restore platform designed for VMs – When backing up a host server with VMs, be sure to choose a backup platform that gives you the ability to restore individual VM files.

Nutanix and server virtualization

Nutanix understands server virtualization and how it enables and enhances an organization’s ability to work productively. We have a range of virtualization tools and solutions designed to simplify the entire process, from deployment to day-to-day management.

With Nutanix AHV, you can enjoy all the benefits of virtualization without compromise. Built for today’s hybrid cloud environments, AHV makes the deployment and management of VMs and containers easy and intuitive. With self-healing security and automated data protection with disaster recovery, rich analytics, and more, it’s everything you need without the cost and complexity.
