What is virtualization?

Virtualization, as the name implies, creates a virtual version of something that would otherwise be physical. In a datacenter, the most commonly virtualized items include operating systems, servers, storage devices, and desktops. With virtualization, technologies like applications and operating systems are abstracted away from the hardware or software beneath them. Hardware virtualization involves virtual machines (VMs), which take the place of a “real” computer with a “real” operating system.

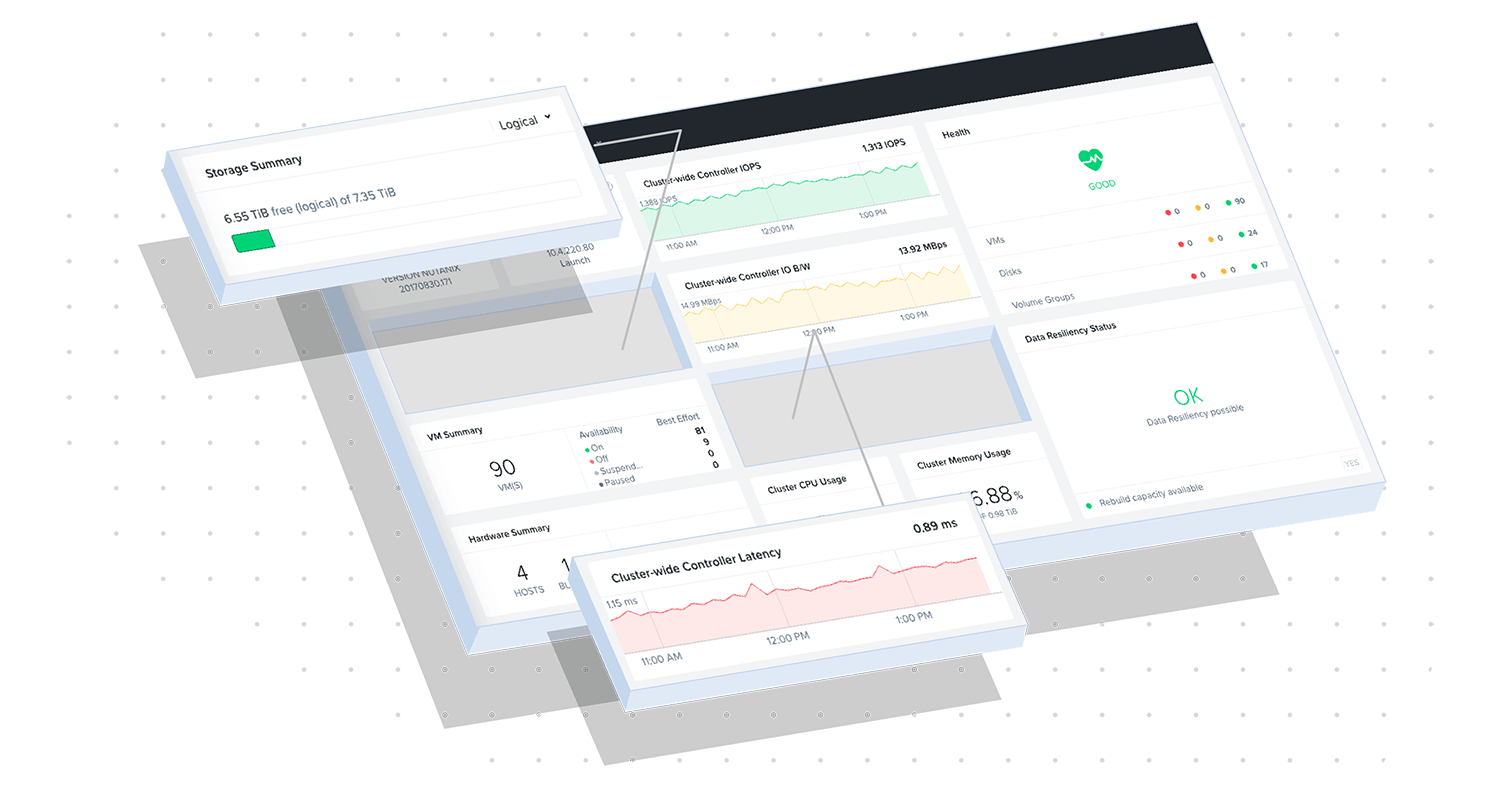

The benefits of virtualization

Put simply, virtualization streamlines your enterprise datacenter. It abstracts away the complexity of deploying and administering infrastructure while providing the flexibility the modern datacenter needs.

Not to mention, virtualization can help create a “greener” IT environment by reducing costs on power, cooling, and hardware. But cost savings aren’t the only advantage of opting for virtualized solutions. Here are more reasons organizations are going virtual:

Minimize Servers - Virtualization minimizes the number of servers an organization needs, letting it cut down on the heat buildup associated with a server-heavy datacenter. The less physical “clutter” your datacenter has, the less money and research you need to funnel into heat dissipation.

Reduce Hardware - When it comes to saving money, minimizing hardware is key. With virtualization, organizations are able to reduce their hardware usage and, most importantly, reduce maintenance, downtime, and electricity costs over time.

Quick Redeployments - Virtualization makes redeploying a new server simple and quick. Should a server die, virtual machine snapshots can come to the rescue within minutes.

Simpler Backups - Backups are far simpler with virtualization. Your virtualization platform can back up your virtual machines and take snapshots of them throughout the day, so recent data is always available. Plus, you can move VMs between servers and redeploy them more quickly.

Reduce Costs & Carbon Footprint - As you virtualize more of your datacenter, you’re inevitably reducing your datacenter footprint—and your carbon footprint as a whole. On top of supporting the planet, reducing your datacenter footprint also cuts down dramatically on hardware, power, and cooling costs.

Better Testing - You’re better equipped to test and re-test in a virtualized environment than in a hardware-driven one. Because you can snapshot a VM before testing, you can revert to that snapshot should you make an error.

Run Any Machine on Any Hardware - Virtualization provides an abstraction layer between software and hardware. In other words, VMs are largely hardware-agnostic: a VM can run on any server with a compatible hypervisor and processor architecture. As a result, you aren’t locked into a single hardware vendor.

Effective Disaster Recovery - When your datacenter relies on virtual instances, disaster recovery is far less painful, and downtime tends to be shorter and less frequent. You can use recent snapshots to get your VMs up and running, or you may choose to move those machines elsewhere.

Cloudify Your Datacenter - Virtualization can help you “cloudify” your datacenter. A fully or mostly virtualized environment mimics that of the cloud, getting you set up for the switch to cloud. In addition, you can choose to deploy your VMs in the cloud.
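To make the snapshot-and-revert idea behind quick redeployments and safer testing concrete, here is a toy model in Python. It is a minimal sketch, not how any particular hypervisor works: the `ToyVM` class is hypothetical, its "disk" is just a dictionary, and it copies the full state on every snapshot, whereas production platforms typically use copy-on-write or redirect-on-write so snapshots are fast and space-efficient.

```python
import copy


class ToyVM:
    """Hypothetical VM whose 'disk' is a dict mapping file paths to contents."""

    def __init__(self) -> None:
        self.disk: dict[str, str] = {}
        self._snapshots: dict[str, dict[str, str]] = {}

    def snapshot(self, label: str) -> None:
        # Record a point-in-time copy of the disk (a full copy, for simplicity).
        self._snapshots[label] = copy.deepcopy(self.disk)

    def revert(self, label: str) -> None:
        # Roll the disk back to exactly how it looked when the snapshot was taken.
        if label not in self._snapshots:
            raise KeyError(f"no snapshot named {label!r}")
        self.disk = copy.deepcopy(self._snapshots[label])


vm = ToyVM()
vm.disk["/etc/app.conf"] = "workers=4"
vm.snapshot("before-test")
vm.disk["/etc/app.conf"] = "workers=oops"  # a test goes wrong
vm.revert("before-test")
print(vm.disk["/etc/app.conf"])  # workers=4
```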

How does virtualization work?

One of the main reasons businesses use virtualization technology is server virtualization, which uses a hypervisor to “duplicate” the hardware underneath. In a non-virtualized environment, the operating system (OS) runs directly on the hardware. When virtualized, the guest OS still runs as if it were on its own hardware, so companies get much of the performance they expect without dedicating a physical machine to it. Though virtualized performance doesn’t always match bare-metal performance, virtualization still works well because most guest operating systems don’t need complete, direct access to the hardware.

As a result, businesses can enjoy better flexibility and control and eliminate any dependency on a single piece of hardware. Because of its success with server virtualization, virtualization has spread to other areas of the datacenter, including applications, networks, data, and desktops.

Virtual Machines

Virtual machines (VMs) are a key component of virtualization technology. Virtualization refers to the creation of a virtual version of a computing resource, such as a server, storage device, operating system, or network, rather than relying on a physical resource. A virtual machine is an emulation of a complete computer system: a hypervisor emulates the underlying hardware so that multiple operating systems can run on one physical machine. While VMs have been around for 50 years, they are becoming more popular with the rise of the remote workforce and end-user computing. Popular virtualization stacks and hypervisors include VMware vSphere with ESXi, Microsoft Windows Server 2016 with Hyper-V, Nutanix Acropolis with AHV, Citrix XenServer, and Oracle VM.

Hypervisor

Hypervisors play a crucial role in virtualization: they are the software layer that creates and manages virtual machines (VMs) on physical hardware. A hypervisor abstracts the host’s hardware and divides it among VMs, each with its own memory, storage, CPU power, and network bandwidth. Hypervisors also isolate VMs from one another and handle communication between them.

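A hypervisor is commonly described as three parts that work together to model the hardware:

Dispatcher: Routes each trap from a VM (an attempt to do something only the hypervisor may do) to the allocator or the interpreter

Allocator: Allocates system resources, such as memory and CPU time, to each VM

Interpreter: Emulates the privileged instructions a VM is not allowed to execute directly on the hardware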
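How the three parts divide the work can be sketched as a toy trap-and-emulate loop. Everything here is hypothetical and simplified (the instruction names, the class names, and a memory-only allocator); it illustrates the roles, not the internals of any real hypervisor. Ordinary instructions run directly, resource requests go to the allocator, and privileged instructions are emulated by the interpreter against the VM’s virtual state.

```python
from dataclasses import dataclass, field

# Hypothetical instruction names; real instruction sets and hypervisors differ.
PRIVILEGED = {"SET_TIMER", "IO_WRITE"}


@dataclass
class GuestVM:
    name: str
    memory_mb: int = 0
    timer: int = 0
    io_log: list[str] = field(default_factory=list)


class Allocator:
    """Decides which host resources a VM receives (memory only, in this toy)."""

    def __init__(self, host_memory_mb: int) -> None:
        self.free_mb = host_memory_mb

    def allocate(self, vm: GuestVM, mb: int) -> None:
        if mb > self.free_mb:
            raise MemoryError(f"{vm.name}: asked for {mb} MB, only {self.free_mb} MB free")
        self.free_mb -= mb
        vm.memory_mb += mb


class Interpreter:
    """Emulates privileged instructions against the VM's virtual state."""

    def emulate(self, vm: GuestVM, op: str, arg: object) -> None:
        if op == "SET_TIMER":
            vm.timer = int(arg)         # changes the VM's virtual timer, not the host's
        elif op == "IO_WRITE":
            vm.io_log.append(str(arg))  # lands on a virtual device, not real hardware


class Dispatcher:
    """Entry point for every trap; decides which module handles it."""

    def __init__(self, allocator: Allocator, interpreter: Interpreter) -> None:
        self.allocator = allocator
        self.interpreter = interpreter

    def handle(self, vm: GuestVM, op: str, arg: object = None) -> str:
        if op == "ALLOC_MEM":
            self.allocator.allocate(vm, int(arg))
            return "allocator"
        if op in PRIVILEGED:
            self.interpreter.emulate(vm, op, arg)
            return "interpreter"
        return "direct"  # unprivileged work runs natively, without hypervisor help


hv = Dispatcher(Allocator(host_memory_mb=8192), Interpreter())
web = GuestVM("web")
print(hv.handle(web, "ALLOC_MEM", 2048))  # allocator
print(hv.handle(web, "SET_TIMER", 10))    # interpreter
print(hv.handle(web, "ADD"))              # direct
```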

Types of virtualization

Data virtualization

Data virtualization is a type of data management that integrates data from multiple applications and physical locations without the need to replicate or move the data. It creates a single virtual abstraction layer that connects to different databases and presents virtual views of the data.
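As a rough illustration of a virtual view, the sketch below uses Python’s built-in `sqlite3` module to attach two separate in-memory databases and answer one question across both at query time, without copying rows into a new table. This is a single-engine stand-in under stated assumptions: real data virtualization platforms federate queries across different database products and locations, and the table names and sample rows here are invented for the example.

```python
import sqlite3


def build_sources() -> sqlite3.Connection:
    """Two separate in-memory databases stand in for two applications' data stores."""
    conn = sqlite3.connect(":memory:")                  # the "orders" application
    conn.execute("ATTACH DATABASE ':memory:' AS crm")   # a separate "CRM" database
    conn.execute("CREATE TABLE main.orders (id INTEGER, customer_id INTEGER, total REAL)")
    conn.execute("CREATE TABLE crm.customers (id INTEGER, name TEXT)")
    conn.executemany(
        "INSERT INTO main.orders VALUES (?, ?, ?)",
        [(1, 10, 99.5), (2, 11, 20.0), (3, 10, 5.5)],
    )
    conn.executemany(
        "INSERT INTO crm.customers VALUES (?, ?)",
        [(10, "Acme"), (11, "Globex")],
    )
    return conn


def customer_totals(conn: sqlite3.Connection) -> list[tuple[str, float]]:
    """A 'virtual view': joins both sources at query time without copying rows anywhere."""
    return conn.execute(
        """
        SELECT c.name, SUM(o.total)
        FROM crm.customers AS c
        JOIN main.orders AS o ON o.customer_id = c.id
        GROUP BY c.name
        ORDER BY c.name
        """
    ).fetchall()


print(customer_totals(build_sources()))  # [('Acme', 105.0), ('Globex', 20.0)]
```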

Server virtualization

Server virtualization divides one physical server into multiple virtual server instances. Each instance is an isolated virtual environment with its own operating system that runs independently of the others. This allows one physical machine to do the work of many, reducing server sprawl and saving on operating expenses.

Operating system virtualization

Operating system virtualization is similar to server virtualization, but instead of emulating hardware, the host operating system’s kernel is configured to run multiple isolated user-space instances on one machine, allowing multiple users to work in different applications at the same time. Because the instances share the host’s kernel, they must be compatible with the host OS; for example, isolated Linux environments run on a Linux host. This is also known as operating system-level virtualization.

Desktop virtualization

Desktop virtualization is a type of software that separates the desktop environment from the physical devices used to access it. This saves time and IT resources because one desktop environment can be deployed to many machines at once. It also makes it easier to deploy updates, fix systems, and apply security policies across all virtual desktops at once.

Network virtualization

Network virtualization combines network hardware and software functionality into a single, software-based entity. Often combined with resource virtualization, it pools multiple network resources and then splits them into separate segments that are assigned to the devices or servers that need them. This type of virtualization can improve network speed, scalability, and reliability.
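Address space is one resource that network virtualization pools and then splits into segments. The sketch below, assuming a single IPv4 pool and equal-size segments, uses Python’s standard `ipaddress` module to carve one /16 pool into per-segment subnets. The `carve_segments` function and the segment names are hypothetical, and a real network virtualization platform would also handle overlays or VLANs, routing, and policy.

```python
import ipaddress


def carve_segments(pool_cidr: str, segment_names: list[str], new_prefix: int) -> dict:
    """Split one shared address pool into equal-size segments, one per name."""
    pool = ipaddress.ip_network(pool_cidr)
    available = pool.subnets(new_prefix=new_prefix)  # lazy iterator over subnets
    segments = {}
    for name in segment_names:
        try:
            segments[name] = next(available)
        except StopIteration:
            raise ValueError(f"{pool} has no room left for segment {name!r}") from None
    return segments


segments = carve_segments("10.0.0.0/16", ["web", "db", "mgmt"], new_prefix=24)
for name, net in segments.items():
    print(name, net, net.num_addresses)
# web 10.0.0.0/24 256
# db 10.0.1.0/24 256
# mgmt 10.0.2.0/24 256
```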

Storage virtualization

Storage virtualization pools the storage resources of multiple physical devices into what appears to be one large storage device. Administrators manage this pool through a single, central console, and both virtual machines and physical servers can draw on it as needed. Behind the scenes, the virtualization software receives each storage request and determines which physical device has the capacity to fulfill it.
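The request-routing step can be sketched as a small pool object. This is a simplified illustration under stated assumptions: `Device` and `StoragePool` are hypothetical names, the pool places each request on the single device with the most free space, and it never splits a request across devices, whereas production storage virtualization typically stripes, replicates, or thin-provisions data.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    capacity_gb: int
    used_gb: int = 0

    @property
    def free_gb(self) -> int:
        return self.capacity_gb - self.used_gb


class StoragePool:
    """Presents several physical devices as one logical pool."""

    def __init__(self, devices: list[Device]) -> None:
        self.devices = list(devices)

    @property
    def free_gb(self) -> int:
        # Consumers see one capacity figure, not per-device numbers.
        return sum(d.free_gb for d in self.devices)

    def allocate(self, size_gb: int) -> str:
        # Route the request to the device with the most free space that can hold it.
        candidates = [d for d in self.devices if d.free_gb >= size_gb]
        if not candidates:
            raise ValueError(f"no single device can hold {size_gb} GB")
        target = max(candidates, key=lambda d: d.free_gb)
        target.used_gb += size_gb
        return target.name


pool = StoragePool([Device("array-a", 500), Device("array-b", 1000), Device("jbod-c", 250)])
print(pool.free_gb)        # 1750
print(pool.allocate(300))  # array-b
print(pool.allocate(600))  # array-b
print(pool.allocate(400))  # array-a
print(pool.free_gb)        # 450
```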

Benefits of virtualization for organizations

Organizations hoping to pursue a more cloud-like IT environment will need to set their sights on virtualization first. Virtualizing your datacenter helps you use your server resources far more efficiently. In the past, businesses had to dedicate each server to a single application, such as email. As a result, they either accumulated many servers to run their many applications or faced a different issue altogether: resources sitting underused on an entire server.

Either way, this method is costly, space-consuming, and inefficient. With virtualization, IT teams can run multiple applications, workloads, and operating systems on a single physical server, each in its own virtual machine, and add or remove resources as needed. Virtualization scales easily with businesses: as demands rise and fall, it helps organizations stay on top of their resource utilization and respond faster to change.
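The consolidation described above is, at its core, a packing problem: fit many small workloads onto as few servers as possible. Here is a minimal sketch using the first-fit decreasing heuristic, one simple approach among many. The workload names and CPU figures are hypothetical, and real placement engines also weigh memory, storage I/O, and affinity rules.

```python
def consolidate(workloads: dict[str, int], host_capacity: int) -> list[list[tuple[str, int]]]:
    """First-fit decreasing: place each workload on the first host with enough headroom."""
    hosts: list[list[tuple[str, int]]] = []
    # Placing the largest workloads first tends to leave fewer half-empty hosts.
    for name, demand in sorted(workloads.items(), key=lambda kv: kv[1], reverse=True):
        if demand > host_capacity:
            raise ValueError(f"{name} needs {demand}, more than one host provides")
        for host in hosts:
            if sum(d for _, d in host) + demand <= host_capacity:
                host.append((name, demand))
                break
        else:
            hosts.append([(name, demand)])  # no existing host had room: add one
    return hosts


# One application per server would need five servers; demand is % of one server's CPU.
apps = {"email": 20, "web": 35, "db": 50, "crm": 15, "files": 10}
placement = consolidate(apps, host_capacity=100)
print(len(placement))  # 2
print(placement)       # [[('db', 50), ('web', 35), ('crm', 15)], [('email', 20), ('files', 10)]]
```

In this example, five one-application servers collapse onto two hosts.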

Virtualization security

When configured correctly, virtualization is no less secure than physical components and systems. Virtualization solves some security challenges and vulnerabilities posed by physical systems, but it also creates new challenges and potential risks. Understanding both the security benefits and the risks of virtualization lets you configure your systems with the right kind of protection and choose solutions that mitigate those risks.

Some common security issues that come with virtualization include:

- Attacks on host servers – While virtual machines (VMs) are isolated from each other even when they reside on the same server (more on that below), attackers who compromise the host server could theoretically access every VM that host controls. They could even create an admin account that lets them delete or collect important corporate information.

- VM snapshots – Snapshots capture the state of a VM at a specific moment in time. They’re used most often for data protection, as a backup for disaster recovery. Because the state of a VM is always changing, snapshots are meant to be temporary, short-lived records. Storing them for long periods makes them an attractive target, because attackers could extract a lot of proprietary data from them.

- File sharing between host server and VMs – Typically, default settings prevent file sharing between the host server and VMs, as well as copy-paste between VMs and remote management dashboards. If those defaults are overridden, an attacker that hacks into the management system could copy confidential data from the VMs or even use management capabilities to infect the VMs with malware.

- VM sprawl – Because it’s so quick and easy to spin up VMs whenever they’re needed (for testing during development, for instance), they are also easy to forget once testing is over. Over time, an organization could accumulate many unused VMs that nobody tracks, which means they don’t get patched or updated. An attacker can easily compromise a forgotten VM and use it as a foothold to reach further into the system.

- Ransomware, viruses, and other malware – Just like physical systems, VMs are vulnerable to viruses, ransomware, and malware. Regular backups are a must in today’s ever-evolving threat landscape.
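Snapshot age and VM sprawl are both easy to check once you have an inventory. The sketch below assumes you have already exported each VM’s last-activity time and each snapshot’s creation time from your management tooling (how you obtain them varies by platform and is not shown). The `audit` function, the 30-day and 7-day thresholds, and the sample inventory are all hypothetical.

```python
from datetime import datetime, timedelta


def audit(
    vm_last_active: dict[str, datetime],
    snapshot_taken: dict[str, datetime],
    now: datetime,
    idle_after: timedelta = timedelta(days=30),
    max_snapshot_age: timedelta = timedelta(days=7),
) -> tuple[list[str], list[str]]:
    """Flag VMs idle longer than idle_after and snapshots older than max_snapshot_age."""
    idle_vms = sorted(name for name, ts in vm_last_active.items() if now - ts > idle_after)
    stale_snapshots = sorted(
        name for name, ts in snapshot_taken.items() if now - ts > max_snapshot_age
    )
    return idle_vms, stale_snapshots


# Hypothetical inventory exported from management tooling.
now = datetime(2024, 6, 1)
vms = {
    "build-01": datetime(2024, 5, 31),
    "test-legacy": datetime(2024, 2, 10),
    "demo-q1": datetime(2024, 3, 28),
}
snaps = {"db-pre-upgrade": datetime(2024, 5, 30), "web-jan": datetime(2024, 1, 15)}
print(audit(vms, snaps, now))  # (['demo-q1', 'test-legacy'], ['web-jan'])
```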

When considering what type of security solutions and capabilities you need for your virtualized systems, here are a few factors to keep in mind.

Hypervisor security

According to an article published in 2022, more than a third (35%) of security risks in server virtualization are related to hypervisors. The hypervisor is the program that runs VMs on a server, and it enables IT to deploy VMs and optimize the use of computing, networking, and storage resources as needed. As the controller of multiple VMs, hypervisors are particularly attractive to attackers. If an attacker gains access to a hypervisor, the operating system and all the VMs — including the data and applications they contain — on that server could be at risk.

Best practices for keeping hypervisors secure include:

- Staying on top of updates – While most hypervisors have automated features that check periodically for updates, it’s not a bad idea to manually check occasionally as well.

- Using thin, or bare-metal, hypervisors – These hypervisors are generally more secure than hosted hypervisors because they run directly on the hardware rather than on top of a general-purpose operating system, which is the most attack-prone element on a server. Without a host OS underneath, compromising an operating system on the server doesn’t by itself give an attacker access to the hypervisor.

- Avoiding unnecessary network interface cards (NICs) – It’s always good practice to limit the physical hardware that connects to the host server and hypervisor. If you must use a NIC, disconnect it when it’s not in use. That leaves one fewer possible entry point for attackers.

- Turning off non-required services – Any program that connects guests with the host OS should be disabled once it’s no longer in use. An example of this would be file sharing between the host and other users.

- Mandating security features on guest OSes – Any OS that interfaces with the hypervisor should be required to have a certain level of security capabilities, such as firewalls.

VM isolation

One benefit of virtualization is that one server can host multiple VMs while isolating each VM from the others, so that it can’t even detect that the others exist. This is a security benefit as well: an attacker who gains entry into one VM can’t automatically get into the other VMs on that server. This isolation extends even to system administrator roles. It’s still important to protect each VM just as you would a physical machine, using solutions such as data encryption, antivirus applications, and firewalls.

Isolating all the hosted elements of a VM is important too. Place elements that users don’t need to interact with in subnetworks so their addresses aren’t readily visible or available to potential attackers.

Host security

To reduce the risk of a successful attack on the host server, you can define exact percentages for resource usage and limits. For instance, you might configure a VM to always get at least 15% of available computing resources but never more than 25%. That way, a VM under attack (for example, by a denial-of-service, or DoS, attack) can’t use up so many resources that the other VMs on the server are affected.
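The 15%/25% example can be written as a small allocation function. This is a minimal sketch under simplifying assumptions: shares are percentages of one host’s CPU, a VM that asks for less than its reservation releases the rest, and spare capacity is handed out in a fixed order rather than by the weighted share schemes real schedulers use. `grant_cpu` is a hypothetical name.

```python
def grant_cpu(
    demands: dict[str, float],
    reservations: dict[str, float],
    limits: dict[str, float],
    capacity: float = 100.0,
) -> dict[str, float]:
    """Return each VM's CPU share, in percent of the host, honoring reservations and limits."""
    if sum(reservations.values()) > capacity:
        raise ValueError("reservations exceed host capacity")
    for vm in demands:
        if reservations[vm] > limits[vm]:
            raise ValueError(f"{vm}: reservation is above its limit")
    # Step 1: each VM gets what it asks for, up to its guaranteed reservation.
    alloc = {vm: float(min(demands[vm], reservations[vm])) for vm in demands}
    spare = capacity - sum(alloc.values())
    # Step 2: hand out spare capacity in name order (for determinism),
    # never giving a VM more than it asks for or more than its limit.
    for vm in sorted(demands):
        want = min(demands[vm], limits[vm]) - alloc[vm]
        take = max(0.0, min(want, spare))
        alloc[vm] += take
        spare -= take
    return alloc


shares = grant_cpu(
    demands={"web": 100, "db": 20, "app": 10},  # "web" is being flooded by a DoS attack
    reservations={"web": 15, "db": 15, "app": 15},
    limits={"web": 25, "db": 25, "app": 25},
)
print(shares)  # {'web': 25.0, 'db': 20.0, 'app': 10.0}
```

Even though the VM under attack asks for the whole host, it is capped at 25%, and the other VMs still get what they need.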

VM security

As stated above, you can (and should) protect each VM with firewalls, antivirus, encryption, and other security solutions. An attacker can use an unprotected VM to gain entry into the system and scan public and private address spaces, and any other unprotected VM on that host can then be attacked easily as well.

VM escape attacks

VM escape is a well-known system exploit that attackers use to gain access to the hypervisor from a VM. To do this, the attacker runs code inside a vulnerable VM that exploits a flaw in the hypervisor, breaking out of (escaping) the VM’s confines to interact directly with the hypervisor. With VM escape attacks, malicious actors can gain access to the host server’s OS and the other VMs on the host. Experts recommend the following to minimize the risk of VM escape attacks:

- Update and patch VM software.

- Share resources only when absolutely mandatory. Limit sharing wherever possible, and turn it off when not needed.

- Limit software installation because each new app brings new vulnerabilities.

Shared resources

As stated previously, sharing resources between VMs, or between guests and the host, creates vulnerabilities in the system. Shared folders and files are attractive targets for attackers who gain entry into the system through a compromised guest. Turn sharing off when it isn’t necessary, and disable copy-and-paste between the host and remote users.

Management interfaces

Some experts recommend separating management APIs from one another to protect the network: keep not only infrastructure management apps but also orchestration capabilities separate from services. APIs are a frequent target for attackers because they expose control functions.

When virtualizing network functions in the cloud, it’s important to follow as closely as possible the specifications for the Virtual Network Functions Manager (VNFM) published by the ETSI Network Functions Virtualisation Industry Specification Group. These specifications set security requirements for APIs that interface with infrastructure and orchestration tools.

Virtualization vs containerization

Virtualization and containerization are related concepts but represent different approaches to managing and deploying applications and computing resources.

Virtualization abstracts an operating system, data, and applications from the physical hardware and divides compute, network, and storage resources among multiple VMs. Containers are sometimes described as a subset of virtualization, namely OS-level virtualization. Containers virtualize the software layers above the OS, while VMs virtualize a complete machine, including the hardware layers.

Just as each VM is isolated from the other VMs and behaves as if it were the only machine on the system, containerized applications also act as if they were the only apps on the system. The apps can’t see each other and are protected from each other, just as VMs are. So VMs and containers are similar in that they isolate resources and allocate them from a common pool.

One of the biggest differences between VMs and containers is simply scope. VMs are made up of a complete operating system, plus data and applications and all the related libraries and other resources. A VM can be dozens of gigabytes in size. Containers, on the other hand, only include an application and its related dependencies. Multiple containers share a common OS and other control features. Many isolated containers can run individually on a host OS.

The following is a summary of key differences between VMs and containers:

Isolation – While VMs’ operating systems are isolated from each other, containers share an OS. That means if the host OS is compromised, all containers are at risk as well.

Underlying OS – VMs each have their own OSes, while containers share a common one.

Platform support – VMs can run practically any OS on the host server. For instance, you can run 10 Windows VMs and 10 Linux VMs on the same server. Because containers share a common OS, they must match that OS: Linux containers have to run on Linux, and Windows containers on Windows.

Deployment – VMs are deployed and managed by the hypervisor, which controls each VM’s functions and resource allocations. Containers are deployed by container applications, such as Docker, and a group of containers needs an orchestration app, such as Kubernetes.

Storage – VMs have separate virtual hard disks, or use a Server Message Block (SMB) file share when a single pool of storage is shared across multiple servers. Containers use the host server’s physical hard disk for storage.

Load balancing – Virtualization relies on failover clusters for load balancing. Failover clusters are groups of connected servers or nodes that provide redundancy if one of the machines fails. Containers rely on the overarching orchestration application, such as Kubernetes, to balance resources and loads.

Virtual networks – VMs are networked via virtual network adaptors (VNAs) that are all connected to a master NIC. With containers, a VNA is broken down into many separate views for a lightweight form of networking.

Despite their differences, it’s possible to combine containers and VMs. For instance, you can run containers inside a VM to experiment with system-on-chip (SoC) deployments before trying them on the physical hardware.

Most of the time, however, organizations primarily use one or the other. It all depends on the resources you need, individual use cases, and preference for the trade-offs.

Virtualization in cloud computing

Much like virtualization vs. containerization, virtualization and cloud computing are related in some ways but not the same thing.

Virtualization in the cloud creates an abstracted ecosystem of a server operating system and storage devices. It allows people to use separate VMs that share a physical instance of a specific resource, whether networking, compute, or storage. Cloud virtualization makes workload management significantly more scalable, cost-effective, and efficient. In fact, virtualization is one of the elements that makes cloud computing work.

With cloud computing, you store data and applications in the cloud and use virtualization to let users share common infrastructure. While cloud service providers such as Amazon Web Services (AWS) or Microsoft Azure handle the physical servers, resources, and other hardware, organizations can use virtualization in concert with cloud computing to keep costs down.

Benefits of cloud virtualization include:

- Efficient, flexible resource allocation

- More productive development ecosystem

- Reduced IT infrastructure costs

- Remote access to data and apps

- Fast, simple scalability

- Cost-effective pay-per-use IT infrastructure

- Support for simultaneous OSes on a single server

The evolution of virtualization: history and future

Decades ago, operating system (OS) virtualization technology was born. In this form, software lets hardware run multiple operating systems simultaneously. Having started on mainframes, this technology enabled IT administrators to avoid wasting costly processing power.

Beginning in the 1960s, virtualization and virtual machines (VMs) started on just a couple of mainframes, which were large, clunky machines with time-sharing capabilities. Most notable among these was the IBM System/360 Model 67, which became a staple in the world of mainframes in the 1970s. It wasn’t long before VMs made their way to personal computers in the 1980s.

But mainstream virtualization adoption didn’t begin until the late ’80s and early ’90s. While some VMs, like those on IBM’s mainframes, are still used today, they’re not nearly as popular, and few companies regard mainframes as a business staple. The first business to make VMs mainstream was Insignia Solutions, which created SoftPC, a software emulator that let non-x86 computers run x86 PC software. This success inspired more organizations, namely Apple, Nutanix, and later Citrix, to come out with their own virtualization products.
